Blog Archives

Do you need sysadmin rights to backup a database?

Looks like a silly question, doesn’t it? – Well, you would be surprised to know it’s not.

Obviously, you don’t need to be a sysadmin to simply issue a BACKUP statement. If you look up the BACKUP statement in Books Online (BOL), you’ll see in the “Security” section that:

BACKUP DATABASE and BACKUP LOG permissions default to members of the sysadmin fixed server role and the db_owner and db_backupoperator fixed database roles.
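As a minimal sketch of the database-level permissions quoted above, a login can be allowed to back up a single database through the db_backupoperator role. The login name backup_login and the backup path are hypothetical, and the login is assumed to exist already.

```sql
USE [AdventureWorks2008R2];
GO
-- Map the (hypothetical) login to a user in this database
CREATE USER [backup_login] FOR LOGIN [backup_login];
GO
-- db_backupoperator carries the BACKUP DATABASE and BACKUP LOG permissions
EXEC sp_addrolemember
     @rolename   = N'db_backupoperator',
     @membername = N'backup_login';
GO
-- Executed by backup_login: a plain disk backup, no sysadmin involved
BACKUP DATABASE [AdventureWorks2008R2]
TO DISK = 'C:\backup\AdventureWorks2008R2.bak';
```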

But there’s more to it than just permissions on the database itself: in order to complete successfully, the backup device must be accessible:

[…] SQL Server must be able to read and write to the device; the account under which the SQL Server service runs must have write permissions. […]

While this statement sounds sensible or even obvious when talking about file system devices, with other types of device it’s less obvious what “permissions” means. By other types of device I mean tapes and Virtual Backup Devices. Since probably nobody uses tapes directly anymore, I’m basically referring to Virtual Backup Devices.

VDI (Virtual Backup Device Interface) is the standard API intended for use by third-party backup software vendors to perform backup operations. Basically, it allows an application to act as a storage device.

The VDI specification is available here (you just need the vbackup.chm help file contained in the self-extracting archive).

If you download and browse the documentation, under the “Security” topic, you will find a worrying statement:

The server connection for SQL Server that is used to issue the BACKUP or RESTORE commands must be logged in with the sysadmin fixed server role.

Wait, … what???!?!??!! Sysadmin???????

Sad but true, sysadmin is the only way to let an application take backups using the VDI API. There is no individual permission you can grant: it’s sysadmin or nothing.

Since most third-party backup software relies on the VDI API, this looks like a serious security flaw: every SQL Server instance around the world that uses third-party backup utilities has a special sysadmin login used by the backup tool or, even worse, the tool runs under the sa login.
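To see how far this reaches on a given instance, one option is to list the members of the sysadmin role and look for the accounts used by backup tools. This is only a sketch based on the standard catalog views; it does not expand Windows group membership, so a tool running under a group member would not show up individually.

```sql
-- Logins that are direct members of the sysadmin fixed server role
SELECT p.name, p.type_desc, p.is_disabled
FROM sys.server_role_members AS rm
INNER JOIN sys.server_principals AS r
    ON r.principal_id = rm.role_principal_id
INNER JOIN sys.server_principals AS p
    ON p.principal_id = rm.member_principal_id
WHERE r.name = N'sysadmin';

-- Is the current connection a sysadmin? Returns 1 (yes) or 0 (no)
SELECT IS_SRVROLEMEMBER('sysadmin') AS is_sysadmin;
```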

In my opinion, this is an unacceptable limitation and I would like to see a better implementation in a future version, so I filed a suggestion on Connect.

If you agree with me, feel free to upvote it and comment on it.

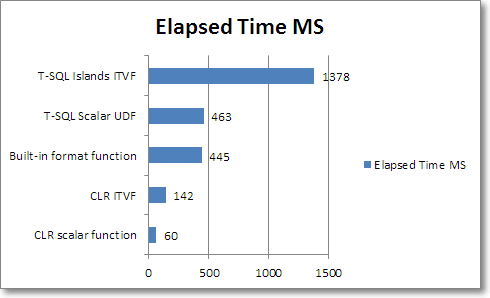

SQL Server and Custom Date Formats

Today SQL Server Central is featuring my article Dealing with custom date formats in T-SQL.

There’s a lot of code on that page and I thought that making it available for download would make it easier to play with.

You can download the code from this page or from the Code Repository.

- CustomDateFormat.cs

- formatDate_Islands_iTVF.sql

- formatDate_Recursive_iTVF.sql

- formatDate_scalarUDF.sql

- parseDate_Islands_iTVF.sql

I hope you enjoy reading the article as much as I enjoyed writing it.

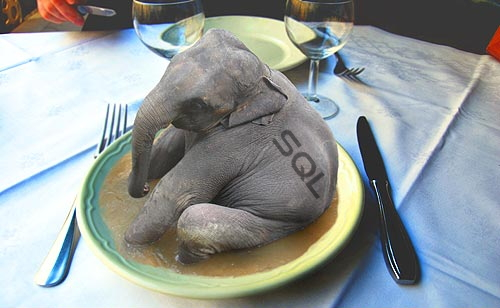

How to Eat a SQL Elephant in 10 Bites

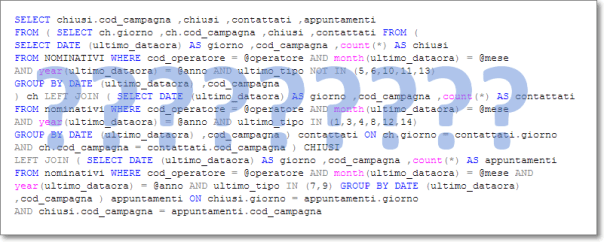

Sometimes, when you have to optimize a poorly performing query, you may find yourself staring at a huge statement, wondering where to start.

Some developers think that a single elephant statement is better than multiple small statements, but this is not always the case.

Let’s try to look from the perspective of software quality:

- Efficiency

The optimizer will likely come up with a suboptimal plan, giving up early on optimizations and transformations.

- Reliability

Any slight change in statistics could lead the optimizer to produce a different and less efficient plan.

- Maintainability

A single huge statement is less readable and maintainable than multiple small statements.

With those points in mind, the only sensible thing to do is cut the elephant into smaller pieces and eat them one at a time.

This is how I do it:

- Lay out the original code and read the statement carefully

- Decide whether a full rewrite is more convenient

- Set up a test environment

- Identify the query parts

  - Identify the main tables

  - Identify non-correlated subqueries and UNIONs

  - Identify correlated subqueries

- Write a query outline

- Break the statement into parts with CTEs, views, functions and temporary tables

- Merge redundant subqueries

- Put it all together

- Verify the output based on multiple different input values

- Comment your work thoroughly

1. Lay out the original code and read the statement carefully

Use one of the many SQL formatters you can find online. My favorite one is Tao Klerks’ Poor Man’s T-SQL Formatter: it’s very easy to use and configure and it comes with a handy SSMS add-in and plugins for Notepad++ and WinMerge. Moreover, it’s free and open source. A must-have.

Once your code is readable, don’t rush to the keyboard: take your time and read it carefully.

- Do you understand (more or less) what it is supposed to do?

- Do you think you could have coded it yourself?

- Do you know all the T-SQL constructs it contains?

If you answered “yes” to all the above, you’re ready to go to the next step.

2. Decide whether a full rewrite is more convenient

OK, that code sucks and you have to do something. It’s time to make a decision:

- Take the business rules behind the statement and rewrite it from scratch

When the statement is too complicated and unreadable, it might be less time-consuming to throw the old statement away and write your own version.

Usually it is quite easy when you know exactly what the code is supposed to do. Just make sure you’re not making wrong assumptions and be prepared to compare your query with the original one many times.

- Refactor the statement

When the business rules are unclear (or unknown), starting from scratch is not an option. No, don’t laugh! The business logic may have been buried in the sands of time, or you may simply be working on a query without any will to understand the business processes behind it.

Bring a big knife: you’re going to cut the elephant in pieces.

- Leave the statement unchanged

Sometimes the statement is too big or too complicated to bother taking the time to rewrite it. For instance, this query would take months to rewrite manually.

It works? Great: leave it alone.

3. Set up a test environment

It doesn’t matter how you decide to do it: at the end of the day you will have to compare the results of your rewritten query with the results of the “elephant” and make sure you did not introduce errors in your code.

The best way to do this is to prepare a script that compares the results of the original query with the results of your rewritten version. This is the script I am using (you will find it in the code repository, as usual).

-- =============================================

-- Author: Gianluca Sartori - spaghettidba

-- Create date: 2012-03-14

-- Description: Runs two T-SQL statements and

-- compares the results

-- =============================================

-- Drop temporary tables

IF OBJECT_ID('tempdb..#original') IS NOT NULL

DROP TABLE #original;

IF OBJECT_ID('tempdb..#rewritten') IS NOT NULL

DROP TABLE #rewritten;

-- Store the results of the original

-- query into a temporary table

WITH original AS (

<original, text, >

)

SELECT *

INTO #original

FROM original;

-- Add a sort column

ALTER TABLE #original ADD [______sortcolumn] int identity(1,1);

-- Store the results of the rewritten

-- query into a temporary table

WITH rewritten AS (

<rewritten, text, >

)

SELECT *

INTO #rewritten

FROM rewritten;

-- Add a sort column

ALTER TABLE #rewritten ADD [______sortcolumn] int identity(1,1);

-- Compare the results

SELECT 'original' AS source, *

FROM (

SELECT *

FROM #original

EXCEPT

SELECT *

FROM #rewritten

) AS A

UNION ALL

SELECT 'rewritten' AS source, *

FROM (

SELECT *

FROM #rewritten

EXCEPT

SELECT *

FROM #original

) AS B;

The script is an SSMS query template that takes the results of the original and the rewritten query and compares the resultsets, returning all the missing or different rows. The script uses two CTEs to wrap the two queries: this means that the ORDER BY clause (if any) will have to be moved outside the CTE.

Also, the results of the two queries are piped to temporary tables, which means that you can’t have duplicate column names in the result set.

Another thing worth noting is that the statements to compare cannot be stored procedures. One simple way to overcome this limitation is to use the technique I described in this post.

The queries inside the CTEs should then be rewritten as:

SELECT * FROM OPENQUERY(LOOPBACK,'<original, text,>')

Obviously, all the quotes must be doubled, which is the reason why I didn’t set up the script this way in the first place. It’s annoying, but it’s the only way I know of to pipe the output of a stored procedure into a temporary table without knowing the resultset definition in advance. If you can do better, suggestions are always welcome.
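To make the quote doubling concrete, here is a small sketch. It assumes a linked server named LOOPBACK that points back to the local instance, and a hypothetical procedure dbo.usp_GetOrdersByStatus with a string parameter; neither name comes from the original script.

```sql
-- Direct call (cannot be placed inside a CTE):
--   EXEC AdventureWorks2008R2.dbo.usp_GetOrdersByStatus @Status = 'Shipped';

-- Same call wrapped in OPENQUERY: the whole statement becomes a string
-- literal, so every single quote inside it is doubled.
SELECT *
FROM OPENQUERY(
        LOOPBACK,
        'EXEC AdventureWorks2008R2.dbo.usp_GetOrdersByStatus @Status = ''Shipped'''
     );
```

This SELECT is what would go inside the `original` or `rewritten` CTE of the comparison template.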

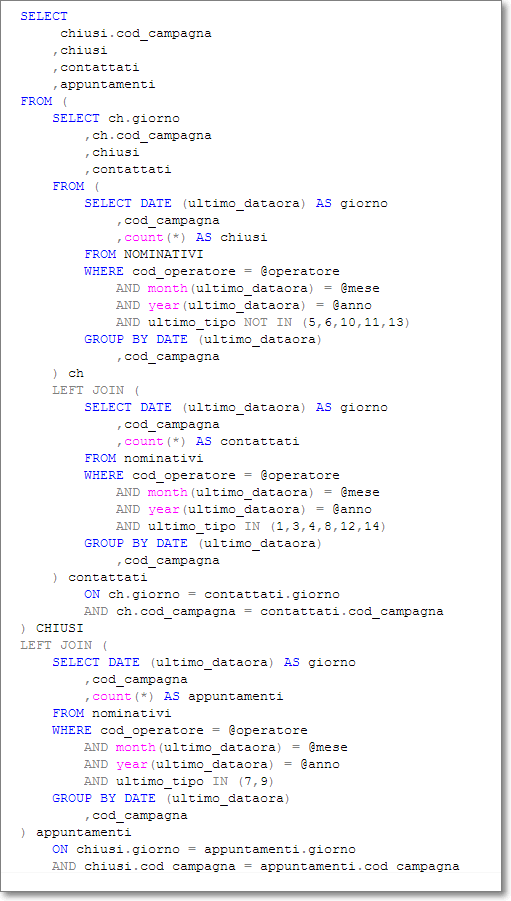

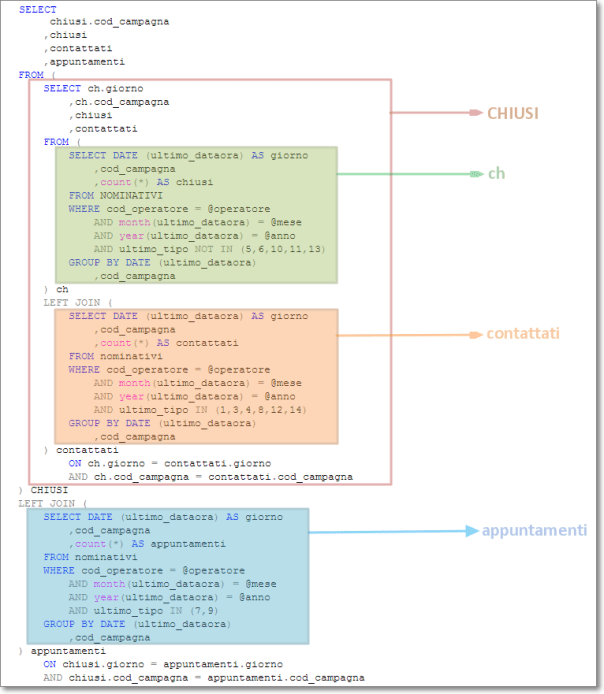

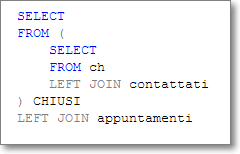

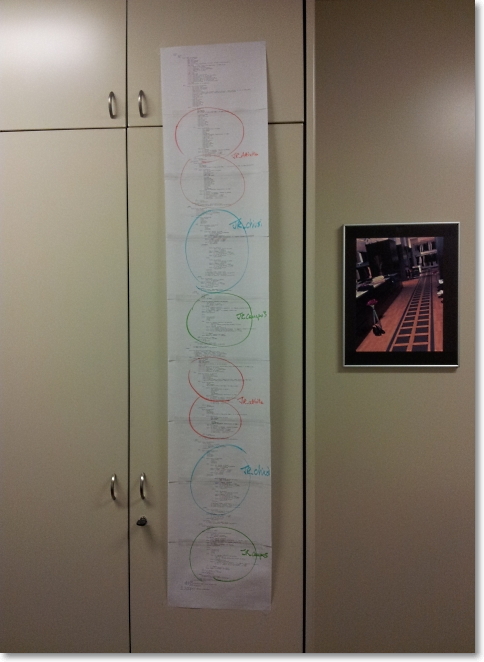

4. Identify the query parts

OK, now you have everything ready and you can start eating the elephant. The first thing to do is to identify all the autonomous blocks in the query and give them a name. You can do this at any granularity and repeat the task as many times as you like: the important thing is that at the end of this process you have a list of query parts and a name for each part.

Identify the main tables

Usually I like the idea that the data comes from one “main” table and all the rest comes from correlated tables. For instance, if I have to return a resultset containing some columns from the “SalesOrderHeader” table and some columns from the “SalesOrderDetail” table, I consider SalesOrderHeader the main table and SalesOrderDetail a correlated table. It fits well with my mindset, but you are free to see things the way you prefer.

Probably these tables are already identified by an alias: note down the aliases and move on.

Identify non-correlated subqueries and UNIONs

Non-correlated subqueries can be treated as inline views. Often these subqueries are joined to the main tables to enrich the resultset with additional columns.

Don’t be scared away by huge subqueries: you can always repeat all the steps for any single subquery and rewrite it to be more compact and readable.

Again, just note down the aliases and move to the next step.

Identify correlated subqueries

Correlated subqueries are not different from non-correlated subqueries, with the exception that you will have less freedom to move them from their current position in the query. However, that difference doesn’t matter for the moment: give them a name and note it down.
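As an illustration of the three kinds of parts, here is a small made-up query against the AdventureWorks sample tables, with a comment naming each block. The query is only an example of the labelling exercise, not a statement taken from a real system.

```sql
SELECT SOH.SalesOrderID                  -- main table: SOH
      ,SOH.OrderDate
      ,CustomerOrders.OrderCount
      ,DetailLines = (                   -- correlated subquery "DetailLines":
            SELECT COUNT(*)              -- it references SOH, so it cannot be
            FROM Sales.SalesOrderDetail AS SOD   -- moved around freely
            WHERE SOD.SalesOrderID = SOH.SalesOrderID
       )
FROM Sales.SalesOrderHeader AS SOH
INNER JOIN (                             -- non-correlated subquery "CustomerOrders":
    SELECT CustomerID                    -- an inline view that runs on its own
          ,COUNT(*) AS OrderCount
    FROM Sales.SalesOrderHeader
    GROUP BY CustomerID
) AS CustomerOrders
    ON CustomerOrders.CustomerID = SOH.CustomerID;
```

The list of parts for this query would be: SOH (main table), CustomerOrders (non-correlated) and DetailLines (correlated).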

5. Write a query outline

Use the names you identified in the previous step and write a query outline. It won’t execute, but it gives you the big picture.

If you really want the big picture, print the query. It may seem crazy, but sometimes I find it useful to be able to see the query as a whole, with all the parts with their names highlighted in different colors.

Yes, that’s a single SELECT statement, printed in Courier New 8 pt. on 9 letter sheets, hanging on the wall in my office.

6. Break the statement into parts with CTEs, views, functions and temporary tables

SQL Server offers a fair amount of tools that allow breaking a single statement into parts:

- Common Table Expressions

- Subqueries

- Views

- Inline Table Valued Functions

- Multi-Statement Table Valued Functions

- Stored procedures

- Temporary Tables

- Table Variables

Ideally, you will choose the one that performs best in your scenario, but you could also take usability and modularity into account.

CTEs and subqueries are a good choice when the statement they contain is not used elsewhere and there is no need to reuse that code.

Table-valued functions and views, on the contrary, are most suitable when there is an actual need to encapsulate the code in modules to be reused in multiple places.

Generally speaking, you will use temporary tables or table variables when the subquery gets used more than once in the statement, thus reducing the load.

Though I would really like to go into deeper details on the performance pros and cons of each construct, that would take an insane amount of time and space. You can find a number of articles and blogs on those topics and I will refrain from echoing them here.
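As a rough sketch of how two of these tools can be combined, the example below materializes a row set that is needed twice into a temporary table and wraps a single-use calculation in a CTE. The query itself is invented for illustration and uses the AdventureWorks sample tables; whether this split performs better than a single statement depends on your data.

```sql
IF OBJECT_ID('tempdb..#ProductSales') IS NOT NULL
    DROP TABLE #ProductSales;

-- Temporary table: the per-product totals are read twice below,
-- so they are computed once and stored
SELECT ProductID
      ,SUM(LineTotal) AS TotalSales
INTO #ProductSales
FROM Sales.SalesOrderDetail
GROUP BY ProductID;

-- CTE: the overall average is used only in this statement
WITH AverageSales AS (
    SELECT AVG(TotalSales) AS AvgTotalSales
    FROM #ProductSales
)
SELECT PS.ProductID
      ,PS.TotalSales
      ,A.AvgTotalSales
FROM #ProductSales AS PS
CROSS JOIN AverageSales AS A
WHERE PS.TotalSales > A.AvgTotalSales;
```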

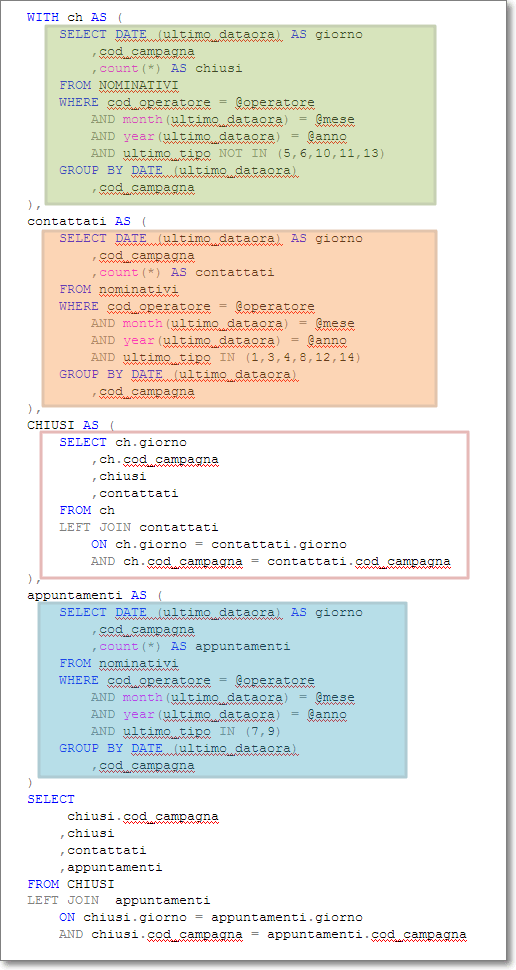

7. Merge redundant subqueries

Some parts of your query may be redundant and you may have the opportunity to merge those parts. The merged query will be more compact and will likely perform significantly better.

For instance, you could have multiple subqueries that perform aggregate calculations on the same row set:

SELECT ProductID

,Name

,AverageSellOutPrice = (

SELECT AVG(UnitPrice)

FROM Sales.SalesOrderDetail

WHERE ProductID = PR.ProductID

)

,MinimumSellOutPrice = (

SELECT MIN(UnitPrice)

FROM Sales.SalesOrderDetail

WHERE ProductID = PR.ProductID

)

,MaximumSellOutPrice = (

SELECT MAX(UnitPrice)

FROM Sales.SalesOrderDetail

WHERE ProductID = PR.ProductID

)

FROM Production.Product AS PR;

The above query can be rewritten easily to avoid hitting the SalesOrderDetail table multiple times:

SELECT ProductID

,Name

,AverageSellOutPrice

,MinimumSellOutPrice

,MaximumSellOutPrice

FROM Production.Product AS PR

CROSS APPLY (

SELECT AVG(UnitPrice), MIN(UnitPrice), MAX(UnitPrice)

FROM Sales.SalesOrderDetail

WHERE ProductID = PR.ProductID

) AS SellOutPrices (AverageSellOutPrice, MinimumSellOutPrice, MaximumSellOutPrice);

Another typical situation where you can merge some parts is when multiple subqueries perform counts on slightly different row sets:

SELECT ProductID

,Name

,OnlineOrders = (

SELECT COUNT(*)

FROM Sales.SalesOrderHeader AS SOH

WHERE SOH.OnlineOrderFlag = 1

AND EXISTS (

SELECT *

FROM Sales.SalesOrderDetail

WHERE SalesOrderID = SOH.SalesOrderID

AND ProductID = PR.ProductID

)

)

,OfflineOrders = (

SELECT COUNT(*)

FROM Sales.SalesOrderHeader AS SOH

WHERE SOH.OnlineOrderFlag = 0

AND EXISTS (

SELECT *

FROM Sales.SalesOrderDetail

WHERE SalesOrderID = SOH.SalesOrderID

AND ProductID = PR.ProductID

)

)

FROM Production.Product AS PR;

The only difference between the two subqueries is the predicate on SOH.OnlineOrderFlag. The two queries can be merged by introducing a CASE expression in the aggregate:

SELECT ProductID

,Name

,ISNULL(OnlineOrders,0) AS OnlineOrders

,ISNULL(OfflineOrders,0) AS OfflineOrders

FROM Production.Product AS PR

CROSS APPLY (

SELECT SUM(CASE WHEN SOH.OnlineOrderFlag = 1 THEN 1 ELSE 0 END),

SUM(CASE WHEN SOH.OnlineOrderFlag = 0 THEN 1 ELSE 0 END)

FROM Sales.SalesOrderHeader AS SOH

WHERE EXISTS (

SELECT *

FROM Sales.SalesOrderDetail

WHERE SalesOrderID = SOH.SalesOrderID

AND ProductID = PR.ProductID

)

) AS Orderscount (OnlineOrders, OfflineOrders);

There are infinite possibilities and enumerating them all would be far beyond the scope of this post. This is one of the topics that my students often find hard to understand and I realize that it really takes some experience to identify merge opportunities and implement them.

8. Put it all together

Remember the query outline you wrote previously? It’s time to put it into action.

Some of the identifiers may have gone away in the merge process, some others are still there and have been transformed into different SQL constructs, such as CTEs, iTVFs or temporary tables.

9. Verify the output based on multiple different input values

Now it’s time to see if your new query works exactly like the original one. You already have a script for that: you can go on and use it.

Remember that the test can be considered meaningful only if you repeat it a reasonably large number of times, with different parameters. Some queries could appear to be identical, but still be semantically different. Make sure the rewritten version handles NULLs and out-of-range parameters in the same way.
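A tiny, self-contained example of two filters that look interchangeable but treat NULLs differently (the table variable and its rows are made up for the demonstration):

```sql
DECLARE @Products TABLE (ProductID int NOT NULL, Color nvarchar(15) NULL);

INSERT INTO @Products (ProductID, Color) VALUES (1, N'Red');
INSERT INTO @Products (ProductID, Color) VALUES (2, N'Blue');
INSERT INTO @Products (ProductID, Color) VALUES (3, NULL);

-- Version A: NULL <> N'Red' evaluates to UNKNOWN, so product 3 is filtered out.
-- Returns ProductID 2 only.
SELECT ProductID
FROM @Products
WHERE Color <> N'Red';

-- Version B: "everything that is not in the set of red products".
-- Returns ProductID 2 and 3.
SELECT ProductID
FROM @Products
WHERE ProductID NOT IN (
    SELECT ProductID
    FROM @Products
    WHERE Color = N'Red'
);
```

A test run on data without NULL colors would report the two versions as identical, which is why the comparison has to be repeated on varied inputs.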

10. Comment your work thoroughly

If you don’t comment your work, somebody will find it even more difficult to maintain than the elephant you found when you started.

Comments are free and don’t affect query performance in any way. Don’t add comments that mimic what the query does; instead, write a meaningful description of the output of the query.

For instance, given a code fragment like this:

SELECT H.SalesOrderID, H.OrderDate, D.ProductID

INTO #orders

FROM Sales.SalesOrderHeader AS H

INNER JOIN Sales.SalesOrderDetail AS D

ON H.SalesOrderID = D.SalesOrderID

WHERE OrderDate BETWEEN @StartDate AND @EndDate

a comment like “joins OrderHeader to OrderDetail” adds nothing to the clarity of the code. A comment like “Selects the orders placed between the @StartDate and @EndDate and saves the results in a temporary table for later use” would be a much better choice.

Elephant eaten. (Burp!)

After all, it was not too big, was it?

My first year in blogging

WordPress.com prepared a 2011 annual report for this blog and I want to share it with you.

Here’s an excerpt:

A San Francisco cable car holds 60 people. This blog was viewed about 2,900 times in 2011. If it were a cable car, it would take about 48 trips to carry that many people.

Click here to see the complete report.

This is my first year as a blogger and I’m very happy with it. I didn’t post very often (25 posts means that I could put together only 2 posts per month), but it was challenging and entertaining at the same time.

Blogging takes a hell of a lot of time. Moreover, my blog is written in English, which is a foreign language for me. Blogging on technical topics requires checking the code you post thoroughly. Finding something new to blog about is not always easy and I want to avoid writing about topics that are already covered in thousands of articles and blogs.

Posting on this blog is difficult and time consuming, but I enjoy it a lot. I hope I will be able to keep writing at this pace (or a better one) in 2012.

Just a few words on WordPress: it’s a great blogging platform, hosted in an outstanding manner at wordpress.com. If you want to start a blog, choosing the right platform today is a no-brainer. Thanks to all the folks at wordpress.com for the great work.

Mirrored Backups: a useful feature?

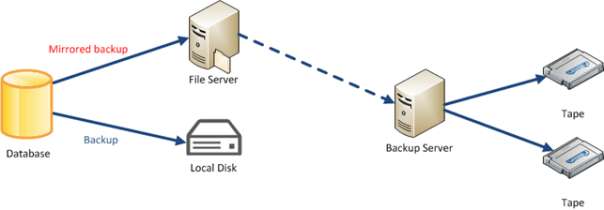

One of the features found in the Enterprise Edition of SQL Server is the ability to take mirrored backups. Basically, taking a mirrored backup means creating additional copies of the backup media (up to three) using a single BACKUP command, eliminating the need to perform the copies with copy or robocopy.

The idea behind it is that you can back up to multiple locations and increase the protection level by having additional copies of the backup set. In case one of the copies gets lost or corrupted, you can use the mirrored copy to perform a restore.

BACKUP DATABASE [AdventureWorks2008R2]

TO DISK = 'C:\backup\AdventureWorks2008R2.bak'

MIRROR TO DISK = 'H:\backup\AdventureWorks2008R2.bak'

WITH FORMAT;

GO

Another possible scenario for a mirrored backup is deferred tape migration: you can back up to a local disk and mirror to a shared folder on a file server. That way you have a local copy of the backup set to restore in case of need, and you let the mirrored copy migrate to tape when the disk backup software processes the file server’s disks.

Mirrored backup sets can be combined with striped backups, provided that all the mirror copies contain the same number of stripes:

BACKUP DATABASE [AdventureWorks2008R2]

TO DISK = 'C:\backup\AdventureWorks2008R2_1.bak',

DISK = 'C:\backup\AdventureWorks2008R2_2.bak',

DISK = 'C:\backup\AdventureWorks2008R2_3.bak'

MIRROR TO DISK = 'H:\AdventureWorks2008R2_1.bak',

DISK = 'H:\AdventureWorks2008R2_2.bak',

DISK = 'H:\AdventureWorks2008R2_3.bak'

WITH FORMAT;

GO

When restoring from a striped + mirrored backup set, you can mix the files from one media set with the files from another, as each mirrored copy is an exact copy of the main backup set.

RESTORE DATABASE [AW_Restore]

FROM DISK = N'C:\backup\AdventureWorks2008R2_1.bak', -- main media

DISK = N'H:\AdventureWorks2008R2_2.bak', -- mirror media

DISK = N'H:\AdventureWorks2008R2_3.bak' -- mirror media

WITH FILE = 1,

MOVE N'AdventureWorks2008R2_Data' TO N'C:\DATA\AW_Restore.mdf',

MOVE N'AdventureWorks2008R2_Log' TO N'C:\DATA\AW_Restore_1.ldf',

MOVE N'FileStreamDocuments2008R2' TO N'C:\DATA\AW_Restore_2.Documents2008R2',

NOUNLOAD, STATS = 10;

GO

Looks like a handy feature! However, some limitations apply:

- If striped, the mirror must contain the same number of stripes.

Looks sensible: each mirror copy is an exact copy of the main backup set, which would be impossible with a different number of devices.

- Must be used with FORMAT option.

No append supported: the destination device must be overwritten.

- Destination media must be of the same type.

You cannot use disk and tape together. I can understand the reason for this restriction, but, actually, it makes this feature much less useful than it could be.

- Fails the backup if ANY of the mirrored copies fails.

This is the main pain point: creating multiple copies of the same backup set can end up reducing the protection level, because the whole backup process fails when at least one of the destination media is unavailable or faulty.

Does this mean that the ability to take mirrored backups is a useless feature?

Well, it highly depends on your point of view and what matters to you most. I would prefer having at least one copy of the database backup available rather than no backup at all.

Keeping in mind that:

- the same exact result can be accomplished using copy, xcopy or robocopy

- non-local copies are much more likely to fail than local copies

- taking multiple local copies is quite pointless

- Enterprise Edition costs a lot of money

- there’s no GUI for it in the SSMS backup dialog, nor in Maintenance Plans

…I think I could live without this feature. At least, this is not one of the countless reasons why I would prefer Enterprise over cheaper editions.
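For comparison, here is a rough sketch of the "backup locally, then copy" alternative mentioned in the list above, done from T-SQL. It assumes xp_cmdshell is enabled (it is disabled by default) and that the SQL Server service account can write to the H: drive. The point of the sketch is that a failed copy leaves the local backup intact.

```sql
-- Step 1: local backup only. This succeeds or fails on its own.
BACKUP DATABASE [AdventureWorks2008R2]
TO DISK = 'C:\backup\AdventureWorks2008R2.bak'
WITH FORMAT, CHECKSUM;

-- Step 2: copy the file to the second location.
-- xp_cmdshell returns 0 on success and 1 on failure.
DECLARE @rc int;

EXEC @rc = master.dbo.xp_cmdshell
     'copy /Y "C:\backup\AdventureWorks2008R2.bak" "H:\backup\AdventureWorks2008R2.bak"',
     no_output;

IF @rc <> 0
    RAISERROR('Backup completed, but the copy to H:\backup failed.', 16, 1);
```

In a scheduled job the copy step would more commonly be a separate CmdExec step using copy or robocopy, which avoids enabling xp_cmdshell at all.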

Setting up an e-mail alert for DBCC CHECKDB errors

Some months ago I posted a script on a SQLServerCentral forum to help a member automate the execution of DBCC CHECKDB and send an e-mail alert when a consistency error is found.

The original thread can be found here.

I noticed that many people are actually using that script and I also got some useful feedback on the code itself, so I decided to write this post to make an enhanced version available to everyone.

The Problem

Your primary responsibility as a DBA is to safeguard your data with backups. I mean intact backups! Keep in mind that when you back up a corrupt database, you will also restore a corrupt database.

A task that checks the database integrity should be part of your backup strategy and you should be notified immediately when corruption is found.

Unfortunately, the built-in consistency check Maintenance Task does not provide an alerting feature and you have to code it yourself.

The Solution

SQL Server 2000 and above accept the “WITH TABLERESULTS” option for most DBCC commands to output the messages as a result set. Those results can be saved to a table and processed to identify messages generated by corrupt data and raise an alert.
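The "process the saved results and raise an alert" part can be sketched in a few lines. The example below is a simplified illustration, not the procedure that follows: the temporary table holds a made-up sample row standing in for real DBCC output, and the Database Mail profile name and recipient address are placeholders.

```sql
IF OBJECT_ID('tempdb..#dbcc_summary') IS NOT NULL
    DROP TABLE #dbcc_summary;

CREATE TABLE #dbcc_summary (
    Error       int NULL,
    MessageText nvarchar(2048) NULL
);

-- Made-up sample row: in real use these rows come from
-- INSERT ... EXEC('DBCC CHECKDB(...) WITH TABLERESULTS')
INSERT INTO #dbcc_summary (Error, MessageText)
VALUES (8989, N'CHECKDB found 0 allocation errors and 2 consistency errors in database ''Sales''.');

DECLARE @body nvarchar(max);

-- Message 8989 is the CHECKDB summary line; anything other than
-- "0 allocation errors and 0 consistency errors" means trouble
SELECT @body = MessageText
FROM #dbcc_summary
WHERE Error = 8989
    AND MessageText NOT LIKE N'%found 0 allocation errors and 0 consistency errors%';

IF @body IS NOT NULL
    EXEC msdb.dbo.sp_send_dbmail
         @profile_name = N'DBA_Profile',        -- placeholder profile
         @recipients   = N'dba@example.com',    -- placeholder address
         @subject      = N'DBCC CHECKDB found errors',
         @body         = @body;
```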

If you don’t know how to discover the resultset definition of DBCC CHECKDB WITH TABLERESULTS, I suggest that you take a look at this post.

Here is the complete code of the stored procedure I am using on my production databases:

-- https://spaghettidba.com/2011/11/28/email-alert-dbcc-checkdb/

-- You have a TOOLS database, don't you?

-- If not, create it: you'll thank me later.

USE TOOLS;

GO

IF NOT EXISTS( SELECT 1 FROM sys.schemas WHERE name = 'maint')

EXEC('CREATE SCHEMA maint');

GO

-- =============================================

-- Author: Gianluca Sartori - spaghettidba

-- Create date: 2011-06-30

-- Description: Runs DBCC CHECKDB on the database(s) specified

-- and returns a table result that can be used in

-- reporting and alerting.

-- =============================================

CREATE PROCEDURE [maint].[dba_runCHECKDB]

@dbName nvarchar(max) = NULL, -- Database name. If NULL, will check all databases

@PHYSICAL_ONLY bit = 0, -- Set to 1 to perform physical check only. Defaults to 0.

@allMessages bit = 0, -- Set to 1 to return all the messages generated by DBCC

-- Set to 0 to return one summary message for each database (default)

@dbmail_profile sysname = NULL, -- DBMail profile to use when sending the results

@dbmail_recipient sysname = NULL, -- DBMail recipient

@log_to_table bit = 0, -- Set to 1 to enable logging to table DBCC_CHECKDB_HISTORY

@help bit = 0

AS

BEGIN

SET NOCOUNT,

XACT_ABORT,

QUOTED_IDENTIFIER,

ANSI_NULLS,

ANSI_PADDING,

ANSI_WARNINGS,

ARITHABORT,

CONCAT_NULL_YIELDS_NULL ON;

SET NUMERIC_ROUNDABORT OFF;

IF @help = 1

BEGIN

SELECT *

FROM (

SELECT '@dbname nvarchar(max) = NULL','Database name.'

UNION ALL SELECT '','''Database'' checks a single database'

UNION ALL SELECT '','NULL checks all databases'

UNION ALL SELECT '','''Database1,Database2,...DatabaseN'' checks the databases in the list'

UNION ALL SELECT '','''^Database1,^Database2,...^DatabaseN'' checks all databases except the ones in the list'

UNION ALL SELECT '','Combinations of positive (not prefixed with ^) and negative (prefixed with ^) elements'

UNION ALL SELECT '','considers positive elements only. Use negative elements alone.'

UNION ALL SELECT '',''

UNION ALL SELECT '@PHYSICAL_ONLY bit = 0','If set to 1, performs CHECKDB WITH PHYSICAL_ONLY'

UNION ALL SELECT '',''

UNION ALL SELECT '@allMessages bit = 0','If set to 1, returns all the messages generated by DBCC'

UNION ALL SELECT '','If set to 0, returns a summary message for each database'

UNION ALL SELECT '',''

UNION ALL SELECT '@dbmail_profile sysname = NULL','The dbmail profile to use to send out the email.'

UNION ALL SELECT '','NULL uses the global dbmail profile'

UNION ALL SELECT '',''

UNION ALL SELECT '@dbmail_recipient sysname = NULL','The address that will receive the email.'

UNION ALL SELECT '','If set to NULL disables sending the email and returns a result set.'

UNION ALL SELECT '','If not NULL sends the email without returning a result set'

UNION ALL SELECT '',''

UNION ALL SELECT '@log_to_table bit = 0','If set to 1, stores the results in the table DBCC_CHECKDB_HISTORY.'

UNION ALL SELECT '','If the table does not exist, it will create the table for you.'

UNION ALL SELECT '','When logging to a table, a result set is not returned.'

UNION ALL SELECT '',''

UNION ALL SELECT '@help bit = 0','If set to 1 displays this help.'

) AS h (

[-----parameter------------------------------------------------------]

,[-----description----------------------------------------------------------------------------------------------------------------]

)

RETURN

END

DECLARE @version int

DECLARE @sql nvarchar(4000)

DECLARE @ErrorMessage nvarchar(2048)

DECLARE @body nvarchar(max)

DECLARE @Message nvarchar(4000)

DECLARE @Severity int

DECLARE @State int

-- determine major version: DBCC output can be different

SELECT @version = CAST(REPLACE(CAST(SERVERPROPERTY('ProductVersion') AS char(2)),'.','') AS int)

-- Clean up the @dbname parameter

-- Remove leading/trailing spaces

SET @dbname = LTRIM(RTRIM(@dbname))

-- Remove leading commas

WHILE LEFT(@dbname,1) = ','

SET @dbname = SUBSTRING(@dbname, 2, LEN(@dbname))

-- Remove trailing commas

WHILE RIGHT(@dbname,1) = ','

SET @dbname = SUBSTRING(@dbname, 1, LEN(@dbname)-1)

IF OBJECT_ID('tempdb..##DBCC_OUTPUT') IS NOT NULL

DROP TABLE ##DBCC_OUTPUT

-- SQL2005, SQL2008, SQL2008R2 produce this output

-- except for the columns explicitly marked as new in SQL 2012

CREATE TABLE ##DBCC_OUTPUT(

Error int NULL,

[Level] int NULL,

State int NULL,

MessageText nvarchar(2048) NULL,

RepairLevel nvarchar(22) NULL,

Status int NULL,

DbId int NULL, -- was smallint in SQL2005

DbFragId int NULL, -- new in SQL2012

ObjectId int NULL,

IndexId int NULL,

PartitionId bigint NULL,

AllocUnitId bigint NULL,

RidDbId smallint NULL, -- new in SQL2012

RidPruId smallint NULL, -- new in SQL2012

[File] smallint NULL,

Page int NULL,

Slot int NULL,

RefDbId smallint NULL, -- new in SQL2012

RefPruId smallint NULL, -- new in SQL2012

RefFile smallint NULL,

RefPage int NULL,

RefSlot int NULL,

Allocation smallint NULL

)

-- Add a computed column

-- ("found 0" keeps counts such as 10 or 20 from matching the pattern)

ALTER TABLE ##DBCC_OUTPUT ADD Outcome AS

CASE

WHEN Error = 8989 AND MessageText LIKE '%found 0 allocation errors and 0 consistency errors%' THEN 0

WHEN Error <> 8989 THEN NULL

ELSE 1

END

-- Add an identity column to sort results when sending the email

ALTER TABLE ##DBCC_OUTPUT ADD RowId int IDENTITY(1,1)

DECLARE @localTran bit

IF @@TRANCOUNT = 0

BEGIN

SET @localTran = 1

BEGIN TRANSACTION LocalTran

END

BEGIN TRY

-- Create the history table if needed

IF @log_to_table = 1

AND OBJECT_ID('maint.DBCC_CHECKDB_HISTORY') IS NULL

BEGIN

SELECT TOP(0)

RowId,

Error,

[Level],

State,

MessageText,

RepairLevel,

Status,

DbId,

DbFragId,

CAST(NULL AS sysname) AS DatabaseName,

ObjectId,

IndexId,

PartitionId,

AllocUnitId,

RidDbId,

RidPruId,

[File],

Page,

Slot,

RefDbId,

RefPruId,

RefFile,

RefPage,

RefSlot,

Allocation,

Outcome,

GETDATE() AS RunDate

INTO maint.DBCC_CHECKDB_HISTORY

FROM ##DBCC_OUTPUT

ALTER TABLE maint.DBCC_CHECKDB_HISTORY

ADD CONSTRAINT PK_DBCC_CHECKDB_HISTORY PRIMARY KEY CLUSTERED(RowId)

END

-- Open a cursor on the matching databases (version dependent)

DECLARE c_databases CURSOR LOCAL FAST_FORWARD

FOR

SELECT QUOTENAME(name) AS name

FROM master.sys.databases

WHERE (

@dbname IS NULL

OR ',' + @dbname + ',' NOT LIKE '%,[^!^]%' ESCAPE '!'

OR ',' + @dbname + ',' LIKE '%,' + name + ',%'

OR ',' + @dbname + ',' LIKE '%,' + REPLACE(QUOTENAME(name),'[','[[]') + ',%'

)

AND ',' + ISNULL(@dbname,'') + ',' NOT LIKE '%,!^' + name + ',%' ESCAPE '!'

AND ',' + ISNULL(@dbname,'') + ',' NOT LIKE '%,!^' + REPLACE(QUOTENAME(name),'[','[[]') + ',%' ESCAPE '!'

AND state_desc = 'ONLINE'

AND is_read_only = 0

AND source_database_id IS NULL -- Exclude Snapshots

AND name <> 'tempdb'

OPEN c_databases

FETCH NEXT FROM c_databases INTO @dbName

WHILE @@FETCH_STATUS = 0

BEGIN

-- Build a SQL string

SET @sql = 'DBCC CHECKDB('+ @dbName +') WITH TABLERESULTS, ALL_ERRORMSGS '

IF @PHYSICAL_ONLY = 1

SET @sql = @sql + ', PHYSICAL_ONLY '

BEGIN TRY

IF @version > 8 AND @version < 11

BEGIN

-- SQL2005/2008 use this column list

INSERT INTO ##DBCC_OUTPUT (

Error,

[Level],

State,

MessageText,

RepairLevel,

Status,

DbId,

ObjectId,

IndexId,

PartitionId,

AllocUnitId,

[File],

Page,

Slot,

RefFile,

RefPage,

RefSlot,

Allocation

)

EXEC(@sql)

END

IF @version >= 11

BEGIN

-- SQL2012 uses all columns

INSERT INTO ##DBCC_OUTPUT (

Error,

[Level],

State,

MessageText,

RepairLevel,

Status,

DbId,

DbFragId,

ObjectId,

IndexId,

PartitionId,

AllocUnitId,

RidDbId,

RidPruId,

[File],

Page,

Slot,

RefDbId,

RefPruId,

RefFile,

RefPage,

RefSlot,

Allocation

)

EXEC(@sql)

END

END TRY

BEGIN CATCH

SELECT @ErrorMessage = 'Unable to run DBCC on database ' + @dbName + ': ' + ERROR_MESSAGE()

INSERT INTO ##DBCC_OUTPUT (Error, MessageText)

SELECT Error = 8989,

MessageText = @ErrorMessage

END CATCH

FETCH NEXT FROM c_databases INTO @dbName

END

CLOSE c_databases

DEALLOCATE c_databases

IF NOT EXISTS (

SELECT 1 FROM ##DBCC_OUTPUT

)

BEGIN

RAISERROR('No database matches the name specified.',10,1)

END

IF @log_to_table = 1

BEGIN

INSERT INTO maint.DBCC_CHECKDB_HISTORY (

Error,

[Level],

State,

MessageText,

RepairLevel,

Status,

DbId,

DbFragId,

DatabaseName,

ObjectId,

IndexId,

PartitionId,

AllocUnitId,

RidDbId,

RidPruId,

[File],

Page,

Slot,

RefDbId,

RefPruId,

RefFile,

RefPage,

RefSlot,

Allocation,

Outcome,

RunDate

)

SELECT

Error,

[Level],

State,

MessageText,

RepairLevel,

Status,

DbId,

DbFragId,

DatabaseName = ISNULL(DB_NAME(DbId),'resourcedb'),

| ObjectId, | |

| IndexId, | |

| PartitionId, | |

| AllocUnitId, | |

| RidDbId, | |

| RidPruId, | |

| [File], | |

| Page, | |

| Slot, | |

| RefDbId, | |

| RefPruId, | |

| RefFile, | |

| RefPage, | |

| RefSlot, | |

| Allocation, | |

| Outcome, | |

| RunDate = GETDATE() | |

| FROM ##DBCC_OUTPUT | |

| WHERE Error = 8989 | |

| OR @allMessages = 1 | |

| OR DbId IN ( | |

| SELECT DbId | |

| FROM ##DBCC_OUTPUT | |

| WHERE Error = 8989 | |

| AND Outcome = 1 | |

| ) | |

| END | |

| -- Build the final SQL statement | |

| SET @sql = | |

| 'SELECT ISNULL(DB_NAME(DbId),''resourcedb'') AS DatabaseName, ' + | |

| CASE @allMessages | |

| WHEN 1 THEN '*' | |

| ELSE 'MessageText, Outcome' | |

| END + ' | |

| FROM ##DBCC_OUTPUT | |

| WHERE 1 = 1 ' + | |

| CASE @allMessages WHEN 1 THEN '' ELSE 'AND Error = 8989' END | |

| IF @dbmail_recipient IS NULL | |

| BEGIN | |

| -- Query DBCC output directly | |

| EXEC(@sql) | |

| END | |

| ELSE | |

| BEGIN | |

| -- Pipe DBCC output to a variable | |

| SET @sql = ' | |

| SELECT @body = ( | |

| SELECT ISNULL(MessageText,'''') + char(10) AS [text()] | |

| FROM ( ' + @sql + ' AND Error = 8989 ) AS src | |

| WHERE Outcome = 1 | |

| ORDER BY 1 DESC | |

| FOR XML PATH('''') | |

| )' | |

| EXEC sp_executesql @sql, N'@body nvarchar(max) OUTPUT', @body OUTPUT | |

| -- Send CHECKDB report | |

| IF @body IS NOT NULL | |

| BEGIN | |

| EXEC msdb.dbo.sp_send_dbmail | |

| @profile_name = @dbmail_profile, | |

| @recipients = @dbmail_recipient, | |

| @subject = 'Consistency error found!', | |

| @body = @body, | |

| @importance = 'High', | |

| @query = ' | |

| SET NOCOUNT ON; | |

| SELECT TOP(5000) ISNULL(DB_NAME(DbId),''resourcedb'') + '' -'' AS DatabaseName, MessageText | |

| FROM ##DBCC_OUTPUT WITH (NOLOCK) | |

| WHERE DbId IN ( | |

| SELECT DbId | |

| FROM ##DBCC_OUTPUT WITH (NOLOCK) | |

| WHERE Error = 8989 | |

| AND Outcome = 1 | |

| ) | |

| ORDER BY RowId ASC ', | |

| @attach_query_result_as_file = 1, | |

| @query_result_no_padding = 1, | |

| @query_result_header = 0, | |

| @exclude_query_output = 1, | |

| @query_attachment_filename = 'DBCC_CHECKDB_Errors.log' | |

| END | |

| END | |

| IF @localTran = 1 AND XACT_STATE() = 1 | |

| COMMIT TRAN LocalTran | |

| IF OBJECT_ID('tempdb..##DBCC_OUTPUT') IS NOT NULL | |

| DROP TABLE ##DBCC_OUTPUT | |

| END TRY | |

| BEGIN CATCH | |

| SELECT @Message = ERROR_MESSAGE(), | |

| @Severity = ERROR_SEVERITY(), | |

| @State = ERROR_STATE() | |

| IF @localTran = 1 AND XACT_STATE() <> 0 | |

| ROLLBACK TRAN | |

| RAISERROR ( @Message, @Severity, @State) | |

| END CATCH | |

| END |

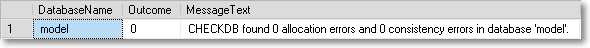

Once the stored procedure is ready, you can run it against the desired databases:

EXEC [maint].[dba_runCHECKDB] @dbName = 'model', @PHYSICAL_ONLY = 0, @allmessages = 0
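Reading the cursor filter in the procedure, @dbName also appears to accept a comma-separated list of names, and names prefixed with a caret (^) are treated as exclusions. Here is a minimal sketch of both forms. WORK and TEST are placeholder database names, and the list must not contain spaces around the commas:

```sql
-- Check only the listed databases (comma-separated, no spaces)
EXEC [maint].[dba_runCHECKDB]
    @dbName = 'model,msdb',
    @PHYSICAL_ONLY = 0,
    @allMessages = 0;

-- Check every eligible database except the ones prefixed with ^
-- (WORK and TEST are placeholder names)
EXEC [maint].[dba_runCHECKDB]
    @dbName = '^WORK,^TEST',
    @PHYSICAL_ONLY = 1,
    @allMessages = 0;
```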

Setting up an e-mail alert

In order to receive an e-mail alert, you can use a SQL Agent job and schedule this script to run every night, or whenever you find appropriate.

EXEC [maint].[dba_runCHECKDB]

@dbName = NULL,

@PHYSICAL_ONLY = 0,

@allmessages = 0,

@dbmail_profile = 'DBA_profile',

@dbmail_recipient = 'dba@mycompany.com'
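If you prefer to script the job rather than create it in Management Studio, something along these lines should work. It is only a sketch: the job name, the schedule name, the 01:00 start time and the TOOLS database (assumed here to be where the procedure lives) are placeholders to adapt to your environment.

```sql
USE msdb;
GO

-- Job name, schedule name and start time are placeholders
EXEC dbo.sp_add_job
    @job_name = N'DBA - Nightly CHECKDB';

EXEC dbo.sp_add_jobstep
    @job_name = N'DBA - Nightly CHECKDB',
    @step_name = N'Run dba_runCHECKDB',
    @subsystem = N'TSQL',
    @database_name = N'TOOLS',   -- assumed home of the procedure
    @command = N'EXEC [maint].[dba_runCHECKDB]
    @dbName = NULL,
    @PHYSICAL_ONLY = 0,
    @allMessages = 0,
    @dbmail_profile = ''DBA_profile'',
    @dbmail_recipient = ''dba@mycompany.com'';';

EXEC dbo.sp_add_schedule
    @schedule_name = N'Nightly at 01:00',
    @freq_type = 4,              -- daily
    @freq_interval = 1,          -- every 1 day
    @active_start_time = 010000; -- HHMMSS

EXEC dbo.sp_attach_schedule
    @job_name = N'DBA - Nightly CHECKDB',
    @schedule_name = N'Nightly at 01:00';

-- Target the local server, otherwise the job never runs
EXEC dbo.sp_add_jobserver
    @job_name = N'DBA - Nightly CHECKDB',
    @server_name = N'(local)';
```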

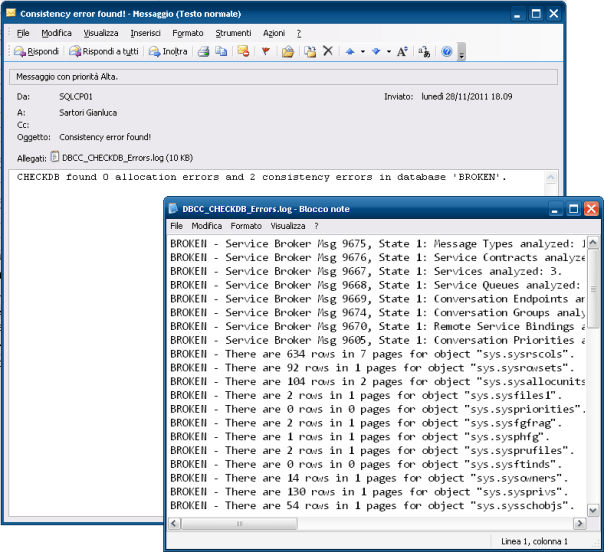

The e-mail message generated by the stored procedure contains the summary outcome and a detailed log, attached as a text file:

Logging to a table

If needed, you can save the output of this procedure to a history table that logs the outcome of DBCC CHECKDB over time:

-- Run the stored procedure with @log_to_table = 1

EXEC TOOLS.maint.dba_runCHECKDB

@dbName = NULL,

@PHYSICAL_ONLY = 0,

@allMessages = 0,

@log_to_table = 1

-- Query the latest results

SELECT *

FROM (

SELECT *, RN = ROW_NUMBER() OVER (PARTITION BY DBId ORDER BY RunDate DESC)

FROM TOOLS.maint.DBCC_CHECKDB_HISTORY

WHERE Outcome IS NOT NULL

) AS dbcc_history

WHERE RN = 1

When invoked with the @log_to_table parameter for the first time, the procedure creates a log table that will be used to store the results. Subsequent executions will append to the table.

No excuses!

The web is full of blogs, articles and forums on how to automate DBCC CHECKDB. If your data has any value to you, CHECKDB must be part of your maintenance strategy.

Run! Check the last time you performed a successful CHECKDB on your databases NOW! Was it last year? You may be in big trouble.
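If you log to the history table, one way to answer that question is to look for databases with no recent entry. The query below is a sketch under a few assumptions: the table lives in a database called TOOLS, every run writes a summary row with error 8989, and the 7-day threshold is an arbitrary placeholder. Note that the procedure also writes an 8989 row when CHECKDB could not run at all, so treat the date as "last logged attempt" and read MessageText before trusting it.

```sql
-- Hypothetical threshold: flag databases with no logged CHECKDB in the last 7 days
DECLARE @max_age_days int;
SET @max_age_days = 7;

SELECT d.name AS DatabaseName,
       MAX(h.RunDate) AS LastLoggedCheck
FROM master.sys.databases AS d
LEFT JOIN TOOLS.maint.DBCC_CHECKDB_HISTORY AS h
       ON h.DbId = d.database_id
      AND h.Error = 8989          -- summary row logged for each run
WHERE d.name <> 'tempdb'
GROUP BY d.name
HAVING MAX(h.RunDate) IS NULL
    OR MAX(h.RunDate) < DATEADD(day, -@max_age_days, GETDATE())
ORDER BY LastLoggedCheck;
```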

Discovering resultset definition of DBCC commands

Lots of blog posts and discussion threads suggest piping the output of DBCC commands to a table for further processing. That’s a great idea, but, unfortunately, an irritatingly high number of those posts contain an inaccurate table definition for the command output.

The reason behind this widespread inaccuracy is twofold.

On one hand the output of many DBCC commands changed over time and versions of SQL Server, and a table that was the perfect fit for the command in SQL Server 2000 is not perfect any more. In this case, the blog/article/thread is simply old, but many people will keep referring to that source assuming that things did not change.

On the other hand, the output is not always documented in BOL, and people often have to guess the table definition based on the data returned by the command. I’ve been guilty of this myself and I’ve been corrected many times, until I decided that I needed a better way to discover the output definition.

You are a database professional and you don’t like to guess, because guessing is never as good as knowing it for sure.

In order to stop guessing, you will have to create a linked server named “loopback” that points back to the same instance where you are running the DBCC command.

I am sure you are asking yourself why you need such a strange thing as a loopback linked server. The idea behind it is that you need a way to query the command as if it were a table or a view, so that it can be used as a valid source for a SELECT…INTO statement. The perfect tool for this kind of task is the OPENQUERY function, which sends pass-through queries that don’t necessarily need to be SELECT statements. OPENQUERY requires a linked server, which can be any OLEDB data source, including a remote server or the same SQL Server instance where the linked server is defined.

OK, let’s create it:

DECLARE @srv nvarchar(4000);

SET @srv = @@SERVERNAME; -- gather this server name

-- Create the linked server

EXEC master.dbo.sp_addlinkedserver

@server = N'LOOPBACK',

@srvproduct = N'SQLServ', -- it’s not a typo: it can’t be “SQLServer”

@provider = N'SQLNCLI', -- change to SQLOLEDB for SQLServer 2000

@datasrc = @srv;

-- Set the authentication to "current security context"

EXEC master.dbo.sp_addlinkedsrvlogin

@rmtsrvname = N'LOOPBACK',

@useself = N'True',

@locallogin = NULL,

@rmtuser = NULL,

@rmtpassword = NULL;
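Before going any further, it may be worth checking that the linked server is registered and actually answers. This is a small sanity check, not part of the original technique; it assumes the linked server was created with the name LOOPBACK as above.

```sql
-- Confirm the linked server is registered
SELECT name, product, provider, data_source
FROM sys.servers
WHERE name = N'LOOPBACK';

-- Round-trip a trivial pass-through query; it should return this instance's name
SELECT *
FROM OPENQUERY(LOOPBACK, 'SELECT @@SERVERNAME AS server_name');
```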

In order to capture the output of DBCC commands, you have to wrap them inside a stored procedure, otherwise SQL Server could complain about missing column information. I don’t know the exact technical reason behind this error (I suppose it has to do with the way metadata is propagated), but the limitation can be overcome by wrapping the command in a stored procedure and using “SET FMTONLY OFF” in the pass-through query.

This is also a nice way to overcome the single INSERT…EXEC limit (and implement many more interesting tricks that I hope to cover in future posts).

For instance, to capture the table definition of DBCC LOGINFO(), you will have to create a stored procedure similar to this:

USE tempdb;

GO

CREATE PROCEDURE loginfo

AS

BEGIN

SET NOCOUNT ON;

DBCC LOGINFO();

END

GO

With the stored procedure and the linked server in place, you can set up the call using OPENQUERY:

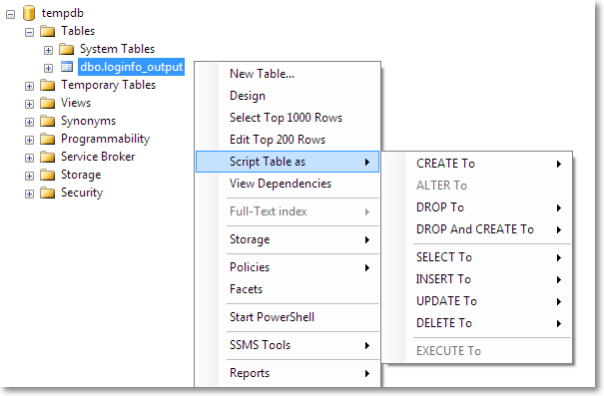

SELECT *

INTO tempdb.dbo.loginfo_output

FROM OPENQUERY(LOOPBACK, 'SET FMTONLY OFF; EXEC tempdb.dbo.loginfo');

DROP PROCEDURE loginfo;

GO

Running this script will create a table named “loginfo_output” in the tempdb database: you can find it in your object explorer and script it out to a new query editor window.
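If you would rather not click through Object Explorer, the same information can be read from the catalog views. A minimal sketch, assuming the table was created as tempdb.dbo.loginfo_output by the script above:

```sql
-- List the columns of the captured output table, in order
SELECT c.column_id,
       c.name AS column_name,
       t.name AS type_name,
       c.max_length,
       c.precision,
       c.scale,
       c.is_nullable
FROM tempdb.sys.columns AS c
JOIN tempdb.sys.types AS t
    ON t.user_type_id = c.user_type_id
WHERE c.object_id = OBJECT_ID('tempdb.dbo.loginfo_output')
ORDER BY c.column_id;
```

The type name, precision and scale are what you need to write the CREATE TABLE statement by hand.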

Repeating these steps on instances running different versions of SQL Server reveals that the table definition changed in SQL2005 and then remained the same in 2008 and 2008R2.

-- SQL Server 2000

CREATE TABLE [dbo].[loginfo_output](

[FileId] [int] NULL,

[FileSize] [numeric](20, 0) NULL,

[StartOffset] [numeric](20, 0) NULL,

[FSeqNo] [int] NULL,

[Status] [int] NULL,

[Parity] [tinyint] NULL,

[CreateLSN] [numeric](25, 0) NULL

)

-- SQL Server 2005, 2008 and 2008R2

CREATE TABLE [dbo].[loginfo_output](

[FileId] [int] NULL,

[FileSize] [bigint] NULL,

[StartOffset] [bigint] NULL,

[FSeqNo] [int] NULL,

[Status] [int] NULL,

[Parity] [tinyint] NULL,

[CreateLSN] [numeric](25, 0) NULL

)

Now that you know what the output looks like, you can happily pipe the results of DBCC LOGINFO to an appropriate table:

-- Declare variable for dynamic sql

DECLARE @sql nvarchar(max)

-- Drop the table if already exists

IF OBJECT_ID('tempdb..loginfo_output') IS NOT NULL

DROP TABLE tempdb..loginfo_output

-- Check SQL Server version

IF CAST(REPLACE(LEFT(CAST(SERVERPROPERTY('ProductVersion') AS nvarchar(128)),2),'.','') AS int) > 8

BEGIN

-- SQL Server 2005+

SET @sql = '

CREATE TABLE tempdb..loginfo_output(

[FileId] [int] NULL,

[FileSize] [bigint] NULL,

[StartOffset] [bigint] NULL,

[FSeqNo] [int] NULL,

[Status] [int] NULL,

[Parity] [tinyint] NULL,

[CreateLSN] [numeric](25, 0) NULL

)

'

END

ELSE

BEGIN

-- SQL Server 2000

SET @sql = '

CREATE TABLE tempdb..loginfo_output(

[FileId] [int] NULL,

[FileSize] [numeric](20, 0) NULL,

[StartOffset] [numeric](20, 0) NULL,

[FSeqNo] [int] NULL,

[Status] [int] NULL,

[Parity] [tinyint] NULL,

[CreateLSN] [numeric](25, 0) NULL

)

'

END

-- Create the output table

EXEC(@sql)

-- Execute DBCC command and

-- pipe results to the output table

INSERT tempdb..loginfo_output

EXEC('DBCC LOGINFO()')

-- Display results

SELECT *

FROM tempdb..loginfo_output

You could ask with good reason why you should use an output table when you could query the wrapper stored procedure directly with OPENQUERY. Based on observation, the trick does not always work and SQL Server can randomly complain about missing column information.

Msg 7357, Level 16, State 2, Line 2 Cannot process the object "loginfo". The OLE DB provider "SQLNCLI10" for linked server "LOOPBACK" indicates that either the object has no columns or the current user does not have permissions on that object.

Again, I don’t have an in-depth technical answer: I can only report what I observed. It’s not a big deal indeed, because the output definition changes very slowly (typically between SQL Server versions) and you probably would review your code anyway when upgrading to a newer version. I guess you can live with a hardcoded table definition when the price to pay for having it dynamic is a random failure.

This post showed you how to capture the output of DBCC LOGINFO, but the same technique can be used for all DBCC commands that allow specifying WITH TABLERESULTS, extended stored procedures, remote stored procedures and all those programmable objects that cannot be inspected easily.

Now that you have the right tool in your hands, do yourself a favour: stop guessing!

Concatenating multiple columns across rows

Today I ran into an interesting question on the forums at SQLServerCentral and I decided to share the solution I provided, because it was fun to code and, hopefully, useful for some of you.

Many experienced T-SQL coders make use of FOR XML PATH('') to build concatenated strings from multiple rows. It’s a nice technique and pretty simple to use.

For instance, if you want to create a list of databases in a single concatenated string, you can run this statement:

SELECT CAST((

SELECT name + ',' AS [text()]

FROM sys.databases

ORDER BY name

FOR XML PATH('')

) AS varchar(max)) AS allDBs

The SELECT statement produces this result:

allDBs

------------------------------------------------------------------

BROKEN,LightHouse,master,model,msdb,tempdb,TEST,test80,TOOLS,WORK,
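Two details of this basic form are worth knowing: the list ends with a dangling separator, and names containing characters such as & or < come back entitized (for example as `&amp;`). A common variation, shown here as a sketch rather than as the only way to do it, puts the separator in front, returns the XML with the TYPE directive, extracts the text with .value() and strips the leading comma with STUFF:

```sql
SELECT STUFF((
        SELECT ',' + name AS [text()]
        FROM sys.databases
        ORDER BY name
        FOR XML PATH(''), TYPE      -- keep the result as XML
    ).value('.', 'nvarchar(max)')   -- read it back as plain text, entities decoded
    , 1, 1, '') AS allDBs;          -- remove the leading comma
```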

Great! But, what if you had to concatenate multiple columns at the same time? It’s an unusual requirement, but not an impossible one.

Let’s consider this example:

-- =================================

-- Create a sentences table

-- =================================

DECLARE @Sentences TABLE (

sentence_id int PRIMARY KEY CLUSTERED,

sentence_description varchar(50)

)

-- =================================

-- Sentences are broken into rows

-- =================================

DECLARE @Rows TABLE (

sentence_id int,

row_id int,

Latin varchar(500),

English varchar(500),

Italian varchar(500)

)

-- =================================

-- Create three sentences

-- =================================

INSERT INTO @Sentences VALUES(1,'First sentence.')

INSERT INTO @Sentences VALUES(2,'Second Sentence')

INSERT INTO @Sentences VALUES(3,'Third sentence')

-- =================================

-- Create sentences rows from

-- "De Finibus bonorum et malorum"

-- by Cicero, AKA "Lorem Ipsum"

-- =================================

INSERT INTO @Rows VALUES(1, 1,

'Neque porro quisquam est,',

'Nor again is there anyone who',

'Viceversa non vi è nessuno che ama,')

INSERT INTO @Rows VALUES(1, 2,

'qui dolorem ipsum quia dolor sit amet,',

'loves or pursues or desires to obtain pain',

'insegue, vuol raggiungere il dolore in sé')

INSERT INTO @Rows VALUES(1, 3,

'consectetur, adipisci velit, sed quia non numquam',

'of itself, because it is pain, but because occasionally',

'perché è dolore ma perché talvolta')

INSERT INTO @Rows VALUES(1, 4,

'eius modi tempora incidunt',

'circumstances occur in which',

'capitano circostanze tali per cui')

INSERT INTO @Rows VALUES(1, 5,

'ut labore et dolore magnam aliquam quaerat voluptatem.',

'toil and pain can procure him some great pleasure.',

'con il travaglio e il dolore si cerca qualche grande piacere.')

INSERT INTO @Rows VALUES(2, 1,

'Ut enim ad minima veniam,',

'To take a trivial example,',

'Per venire a casi di minima importanza,')

INSERT INTO @Rows VALUES(2, 2,

'quis nostrum exercitationem ullam corporis suscipit laboriosam,',

'which of us ever undertakes laborious physical exercise,',

'chi di noi intraprende un esercizio fisico faticoso')

INSERT INTO @Rows VALUES(2, 3,

'nisi ut aliquid ex ea commodi consequatur?',

'except to obtain some advantage from it?',

'se non per ottenere da esso qualche vantaggio?')

INSERT INTO @Rows VALUES(3, 1,

'Quis autem vel eum iure reprehenderit qui in ea voluptate',

'But who has any right to find fault with a man who chooses to enjoy a pleasure',

'O chi può biasimare colui che decide di provare un piacere')

INSERT INTO @Rows VALUES(3, 2,

'velit esse quam nihil molestiae consequatur,',

'that has no annoying consequences,',

'che non porta conseguenze negative,')

INSERT INTO @Rows VALUES(3, 3,

'vel illum qui dolorem eum fugiat quo voluptas nulla pariatur?',

'or one who avoids a pain that produces no resultant pleasure?',

'o che fugge quel dolore che non produce nessun piacere?')

The setup code creates two tables: Sentences and Rows. The first one is the master table, which contains the sentence_id and a description. The second one contains the actual sentences, broken into rows and organized with languages in columns.

For the purposes of this test, I inserted in the Rows table an excerpt of Cicero’s “De Finibus bonorum et malorum”, also known as “Lorem Ipsum”, the printing and typesetting industry’s standard dummy text since the 1500s.

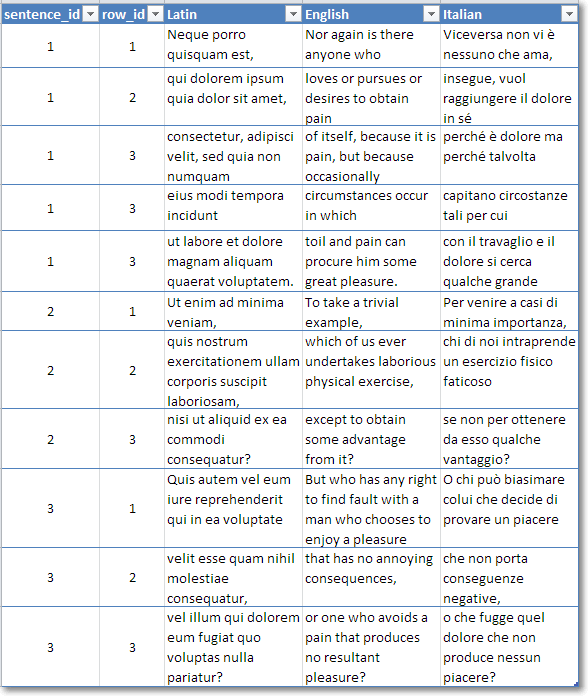

Here’s how the input data looks like:

What we want to do is concatenate all the rows for each sentence, keeping the languages separated. It could be accomplished very easily by concatenating each column separately in a subquery, but what if the input data comes from a rather expensive query? You don’t want to run the statement for each language, do you?

Let’s see how this can be done in a single scan:

SELECT sentence_id, sentence_description, Latin, English, Italian

FROM (

SELECT Sentences.sentence_id, sentence_description, language_name, string

FROM @Sentences AS Sentences

OUTER APPLY (

SELECT *

FROM (

-- =================================

-- Create a Languages inline query

-- =================================

SELECT 'Latin'

UNION ALL SELECT 'English'

UNION ALL SELECT 'Italian'

) Languages (language_name)

CROSS APPLY (

-- =================================

-- Concatenate all the rows for

-- the current sentence and language

-- from an UNPIVOTed version of the

-- original rows table

-- =================================

SELECT sentence_id, string = (

SELECT string + ' ' AS [data()]

FROM @Rows AS src

UNPIVOT ( string FOR language_name IN (Latin, English, Italian) ) AS u

WHERE sentence_id = Sentences.sentence_id

AND language_name = Languages.language_name

ORDER BY row_id

FOR XML PATH('')

)

) AS ca

) AS oa

) AS src

-- =================================

-- Re-transform rows to columns

-- =================================

PIVOT ( MIN(string) FOR language_name IN ([Latin],[English],[Italian])) AS p

If you don’t like PIVOT and UNPIVOT, you can always use CASE expressions to create a crosstab.
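To give an idea of what the CASE version could look like, here is a self-contained sketch on a cut-down table with two languages and made-up sample rows. The CASE expression inside the subquery picks the column for the current language (replacing UNPIVOT) and MAX(CASE …) folds the rows back into columns (replacing PIVOT). Unlike the query above, this simplified form reads the rows once per language, so take it as an illustration of the crosstab syntax and not as a performance recommendation.

```sql
-- Cut-down sample data (placeholder rows, two languages only)
DECLARE @Rows TABLE (
    sentence_id int,
    row_id int,
    Latin varchar(500),
    English varchar(500)
);

INSERT INTO @Rows VALUES (1, 1, 'Neque porro', 'Nor again');
INSERT INTO @Rows VALUES (1, 2, 'quisquam est', 'is there anyone');

SELECT sentence_id,
       -- Crosstab: one MAX(CASE) per output column instead of PIVOT
       Latin   = MAX(CASE language_name WHEN 'Latin'   THEN string END),
       English = MAX(CASE language_name WHEN 'English' THEN string END)
FROM (
    SELECT s.sentence_id,
           l.language_name,
           string = (
               -- CASE picks the column for the current language instead of UNPIVOT
               SELECT CASE l.language_name
                          WHEN 'Latin'   THEN r.Latin
                          WHEN 'English' THEN r.English
                      END + ' ' AS [text()]
               FROM @Rows AS r
               WHERE r.sentence_id = s.sentence_id
               ORDER BY r.row_id
               FOR XML PATH('')
           )
    FROM (SELECT DISTINCT sentence_id FROM @Rows) AS s
    CROSS JOIN (
        SELECT 'Latin'
        UNION ALL SELECT 'English'
    ) AS l (language_name)
) AS src
GROUP BY sentence_id;
```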

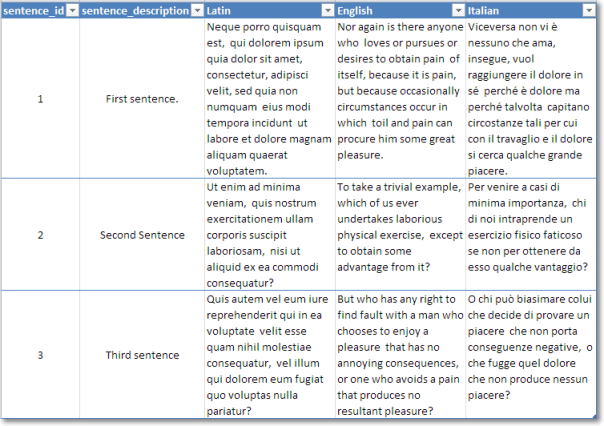

Here’s the final result:

With a little PIVOT, UNPIVOT and FOR XML you can achieve really surprising results: you just need to unleash your creativity.

Trace Flag 3659

Many setup scripts for SQL Server include the 3659 trace flag, but I could not find official documentation that explains exactly what this flag means.

After a lot of research, I found a reference to this flag in a script called AddSelfToSqlSysadmin, written by Ward Beattie, a developer in the SQL Server product group at Microsoft.

The script contains a line which suggests that this flag enables logging all errors to errorlog during server startup. I’m unsure of what kind of errors are not logged without setting the flag, but I didn’t find any further reference. The BOL page for Trace Flags doesn’t list this one, so if you happen to know something more, feel free to add a comment here.
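If you want to find out whether the flag is currently active on one of your instances, DBCC TRACESTATUS will tell you. This only reports whether the flag is on; it says nothing about what the flag does.

```sql
-- Report the status of trace flag 3659; -1 asks for the global state
DBCC TRACESTATUS(3659, -1);
```

Setup scripts typically turn it on with the -T3659 startup parameter, so on an instance installed that way you would expect it to show up as a global flag.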