Category Archives: SQL Server

Do you need sysadmin rights to backup a database?

Looks like a silly question, doesn’t it? – Well, you would be surprised to know it’s not.

Looks like a silly question, doesn’t it? – Well, you would be surprised to know it’s not.

Obviously, you don’t need to be a sysadmin to simply issue a BACKUP statement. If you look up the BACKUP statement on BOL you’ll see in the “Security” section that

BACKUP DATABASE and BACKUP LOG permissions default to members of the sysadmin fixed server role and the db_owner and db_backupoperator fixed database roles.

But there’s more to it than just permissions on the database itself: in order to complete successfully, the backup device must be accessible:

[…] SQL Server must be able to read and write to the device; the account under which the SQL Server service runs must have write permissions. […]

While this statement sound sensible or even obvious when talking about file system devices, with other types of device it’s less obvious what “permissions” means. With other types of device I mean tapes and Virtual Backup Devices. Since probably nobody uses tapes directly anymore, basically I’m referring to Virtual Backup Devices.

VDI (Virtual Backup device Interface) is the standard API intended for use by third-party backup software vendors to perform backup operations. Basically, it allows an application to act as a storage device.

The VDI specification is available here (you just need the vbackup.chm help file contained in the self-extracting archive).

If you download and browse the documentation, under the “Security” topic, you will find a worrying statement:

The server connection for SQL Server that is used to issue the BACKUP or RESTORE commands must be logged in with the sysadmin fixed server role.

Wait, … what???!?!??!! Sysadmin???????

Sad but true, sysadmin is the only way to let an application take backups using the VDI API. There is no individual permission you can grant: it’s sysadmin or nothing.

Since most third-party backup sofwares rely on the VDI API, this looks like a serious security flaw: every SQL Server instance around the world that uses third-party backup utilities has a special sysadmin login used by the backup tool, or, even worse, the tool runs under the sa login.

In my opinion, this is an unacceptable limitation and I would like to see a better implementation in a future version, so I filed a suggestion on Connect.

If you agree with me, feel free to upvote and comment it.

Replaying Workloads with Distributed Replay

A couple of weeks ago I posted a method to convert trace files from the SQL Server 2012 format to the SQL Server 2008 format.

The trick works quite well and the trace file can be opened with Profiler or with ReadTrace from RML Utilities. What doesn’t seem to work just as well is the trace replay with Ostress (another great tool bundled in the RML Utilities).

For some reason, OStress refuses to replay the whole trace file and starts throwing lots of errors.

Some errors are due to the workload I was replaying (it contains CREATE TABLE statements and that can obviuosly work just the first time it is issued), but some others seem to be due to parsing errors, probably because of differences in the trace format between version 11 and 10.

11/20/12 12:30:39.008 [0x00001040] File C:\RML\SQL00063.rml: Parser Error: [Error: 60500][State: 1][Abs Char: 1068][Seq: 0] Syntax error [parse error, expecting `tok_RML_END_RPC'] encountered near 0x0000042C: 6C000D00 0A005700 48004500 52004500 l.....W.H.E.R.E. 0x0000043C: 20005500 6E006900 74005000 72006900 .U.n.i.t.P.r.i. 0x0000044C: 63006500 20002600 6C007400 3B002000 c.e. .&.l.t.;. . 0x0000045C: 24003500 2E003000 30000D00 0A004F00 $.5...0.0.....O. 0x0000046C: 52004400 45005200 20004200 59002000 R.D.E.R. .B.Y. . 0x0000047C: 50007200 6F006400 75006300 74004900 P.r.o.d.u.c.t.I. 0x0000048C: 44002C00 20004C00 69006E00 65005400 D.,. .L.i.n.e.T. 0x0000049C: 6F007400 61006C00 3B000D00 0A003C00 o.t.a.l.;.....<. 0x000004AC: 2F004300 4D004400 3E000D00 0A003C00 /.C.M.D.>.....<. 0x000004BC: 2F004C00 41004E00 47003E00 0D000A00 /.L.A.N.G.>..... 0x000004CC: 11/20/12 12:30:39.010 [0x00001040] File C:\RML\SQL00063.rml: Parser Error: [Error: 110010][State: 100][Abs Char: 1068][Seq: 0] SYNTAX ERROR: Parser is unable to safely recover. Correct the errors and try again.

The error suggests that the two formats are indeed more different than I supposed, thus making the replay with Ostress a bit unrealiable.

Are there other options?

Sure there are! Profiler is another tool that allows replaying the workload, even if some limitations apply. For instance, Profiler cannot be scripted, which is a huge limitation if you are using Ostress in benchmarking script and want to replace it with something else.

That “something else” could actually be the Distributed Replay feature introduced in SQL Server 2012.

Basically, Distributed Replay does the same things that Ostress does and even more, with the nice addition of the possibility to start the replay on multiple machines, thus simulating a workload that resembles more the one found in production.

An introduction to Distributed Replay can be found on Jonathan Kehayias’ blog and I will refrain from going into deep details here: those posts are outstanding and there’s very little I could add to that.

Installing the Distributed Replay feature

The first step for the installation is adding a new user for the distributed replay services. You could actually use separate accounts for the Controller and Client services, but for a quick demo a single user is enough.

The Distributed Replay Controller and Client features must be selected from the Feature Selection dialog of SQLServer setup:

In the next steps of the setup you will also be asked the service accounts to use for the services and on the Replay Client page you will have to enter the controller name and the working directories.

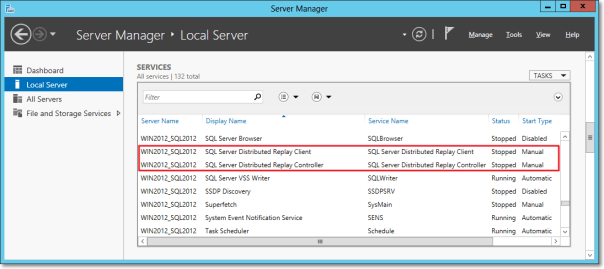

Once the setup is over, you will find two new services in the Server Manager:

After starting the services (first the Controller, then the Client), you can go to the log directories and check in the log files if everything is working.

The two files to check are in the following folders:

- C:\Program Files (x86)\Microsoft SQL Server\110\Tools\DReplayController\Log

- C:\Program Files (x86)\Microsoft SQL Server\110\Tools\DReplayClient\Log

Just to prove one more time that “if something can wrong, it will”, the client log will probably contain an obnoxious error message.

DCOM gotchas

Setting up the distributed replay services can get tricky because of some permissions needed to let the client connect to the controller. Unsurprisingly, the client/controller communication is provided by DCOM, which must be configured correctly.

Without granting the appropriate permissions, in the distributed replay client log file you may find the following message:

2012-11-03 00:43:04:062 CRITICAL [Client Service] [0xC8100005 (6)] Failed to connect controller with error code 0x80070005.

In practical terms, the service account that executes the distributed replay controller service must be granted permissions to use the DCOM class locally and through the network:

- Run dcomcnfg.exe

- Navigate the tree to Console Root, Component Services, Computers, My Computer, DCOM Config, DReplayController

- Right click DReplayController and choose “properties” from the context menu.

- Click the Security tab

- Click the “Launch and Activation Permissions” edit button and grant “Local Activation” and “Remote Activation” permissions to the service account

- Click the “Access Permissions” edit button and grant “Local Access” and “Remote Access” permissions to the service account

- Add the service user account to the “Distributed COM Users” group

- Restart the distributed replay controller and client services

After restarting the services, you will find that the message in the log file has changed:

2012-11-20 14:01:10:783 OPERATIONAL [Client Service] Registered with controller "WIN2012_SQL2012".

Using the Replay feature

Once the services are successfully started, we can now start using the Distributed Replay feature.

The trace file has to meet the same requirements for replay found in Profiler, thus making the “Replay” trace template suitable for the job.

But there’s one more step needed before we can replay the trace file, which cannot be replayed directly. In fact, distributed replay needs to work on a trace stub, obtained preprocessing the original trace file.

The syntax to obtain the stub is the following:

"C:\Program Files (x86)\Microsoft SQL Server\110\Tools\Binn\dreplay.exe" preprocess -i "C:\SomePath\replay_trace.trc" -d "C:\SomePath\preprocessDir"

Now that the trace stub is ready, we can start the replay admin tool from the command line, using the following syntax:

"C:\Program Files (x86)\Microsoft SQL Server\110\Tools\Binn\dreplay.exe" replay -s "targetServerName" -d "C:\SomePath\preprocessDir" -w "list,of,allowed,client,names"

A final word

A comparison of the features found in the different replay tools can be found in the following table:

| Profiler | Ostress | Distributed Replay | |

| Multithreading | YES | YES | YES |

| Debugging | YES | NO | NO |

| Synchronization mode | NO | YES | YES |

| Stress mode | YES | YES | YES |

| Distributed mode | NO | NO | YES |

| Scriptable | NO | YES | YES |

| Input format | Trace | Trace/RML/SQL | Trace |

The Distributed Replay Controller can act as a replacement for Ostress, except for the ability to replay SQL and RML files.

Will we be using RML Utilities again in the future? Maybe: it depends on what Microsoft decides to do with this tool. It’s not unlikely that the Distributed Replay feature will replace the RML Utilities entirely. The tracing feature itself has an unceartain future ahead, with the deprecation in SQL Server 2012. Probably this new feature will disappear in the next versions of SQLServer, or it will be ported to the Extended Events instrastructure, who knows?

One thing is sure: today we have three tools that support replaying trace files and seeing this possibilty disappear in the future would be very disappointing. I’m sure SQL Server will never disappoint us. 🙂

More on converting Trace Files

Yesterday I posted a method to convert trace files from SQL Server 2012 to SQL Server 2008R2 using a trace table.

As already mentioned in that post, having to load the whole file into a trace table has many shortcomings:

- The trace file can be huge and loading it into a trace table could take forever

- The trace data will consume even more space when loaded into a SQL Server table

- The table has to be written back to disk in order to obtain the converted file

- You need to have Profiler 2008 in order to write a trace in the “old” format

- You need to have a SQL Server 2008 instance to dump the trace table back to the file, as Profiler 2008 won’t connect to a SQL Server 2012

That said, it would really be useful if the file could be updated in place, changing just the version byte…

No worries: PowerShell to the rescue!

## ============================================= ## Author: Gianluca Sartori - @spaghettidba ## Create date: 2012-11-07 ## Description: Changes the version information ## in the header of a SQL Server trace ## ============================================= cls # Enter your filename here $fileName = "somefile.trc" # The version information we want to write: 0x0A = 10 = SQLServer 2008 [Byte[]] $versionData = 0x0A # The offset of the version information in the file $offset = 390 [System.IO.FileMode] $open = [System.IO.FileMode]::OpenOrCreate $stream = New-Object System.IO.FileStream -ArgumentList $fileName, $open $stream.Seek($offset, [System.IO.SeekOrigin]::Begin); $stream.Write($versionData, 0, $versionData.Length); $stream.Close()

This script overwrites the version byte in the trace file, which lies at offset 390, changing it from 11 to 10.

This way, even a huge 100 GB trace file can be converted in milliseconds, ready to be consumed by ReadTrace or Profiler.

Enjoy!

P.S.: I haven’t tried, but I guess it could work with previous versions. However, tracing is deprecated in SQL Server 2012 and I guess it doesn’t introduce many differences from the SQL Server 2008 trace file format. The same cannot be said for SQL Server 2005 and I suppose that the file wouldn’t open anyway in Profiler. Unfortunately, I don’t have a SQL Server 2005 around to test.

Convert a Trace File from SQLServer 2012 to SQLServer 2008R2

Recently I started using RML utilities quite a lot.

ReadTrace and Ostress are awesome tools for benchmarking and baselining and many of the features found there have not been fully implemented in SQLServer 2012, though Distributed Replay was a nice addition.

However, as you may have noticed, ReadTrace is just unable to read trace files from SQLServer 2012, so you may get stuck with a trace file you wont’ abe able to process.

When I first hit this issue, I immediately thought I could use a trace table to store the data and then use Profiler again to write back to a trace file.

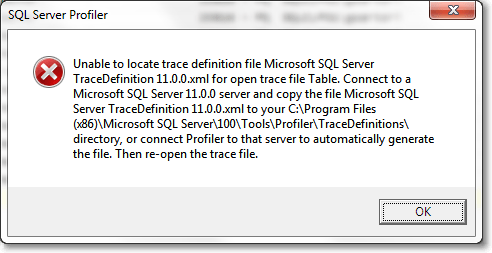

The idea wasn’t bad, but turns out that Profiler 2012 will always write trace files in the new format, with no way to specify the old one. On the other hand, Profiler2008R2 can’t read trace data from a table written by Profiler2012, throwing an ugly exception:

Interesting! So, looks like Profiler stores version information and other metadata somewhere in the trace table, but where exactly?

It might sound funny, but I had to trace Profiler with Profiler in order to know! Looking at the profiler trace, the first thing that Profiler does when trying to open a trace table is this:

declare @p1 int set @p1=180150003 declare @p3 int set @p3=2 declare @p4 int set @p4=4 declare @p5 int set @p5=-1 exec sp_cursoropen @p1 output,N'select BinaryData from [dbo].[trace_test] where RowNumber=0',@p3 output,@p4 output,@p5 output select @p1, @p3, @p4, @p5

So, looks like Profiler stores its metadata in the first row (RowNumber = 0), in binary format.

That was the clue I was looking for!

I loaded a trace file in the old format into another trace table and I started to compare the data to find similarities and differences.

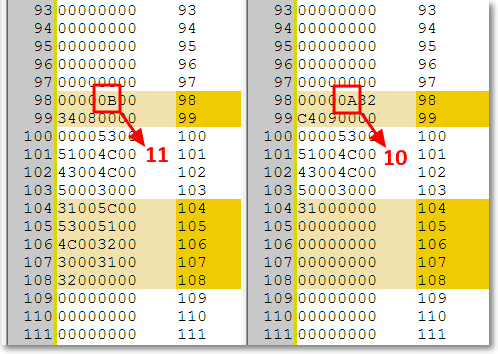

I decided to break the binary headers into Dwords and paste the results in WinMerge to hunt the differences:

-- Break the header row in the trace table into DWords -- in order to compare easily in WinMerge SELECT SUBSTRING(data, 8 * (n - 1) + 3, 8) AS dword ,n AS dwordnum FROM ( SELECT CONVERT(VARCHAR(max), CAST(binarydata AS VARBINARY(max)), 1) AS data FROM tracetable WHERE rownumber = 0 ) AS src INNER JOIN ( SELECT DISTINCT ROW_NUMBER() OVER ( ORDER BY (SELECT NULL) ) / 8 AS n FROM sys.all_columns AS ac ) AS v ON n > 0 AND (n - 1) * 8 <= LEN(data) - 3 ORDER BY 2

If you copy/paste the output in WinMerge you can easily spot the difference around the 100th dword:

Hmmmm, seems promising. Can those “11” and “10” possibly represent the trace version? Let’s try and see.

Now we should just update that section of the header to let the magic happen:

-- Use a table variable to cast the trace -- header from image to varbinary(max) DECLARE @header TABLE ( header varbinary(max) ) -- insert the trace header into the table INSERT INTO @header SELECT binarydata FROM tracetable WHERE RowNumber = 0 -- update the byte at offset 390 with version 10 (SQLServer 2008) -- instead of version 11 (SQLServer 2012) UPDATE @header SET header .WRITE(0x0A,390,1) -- write the header back to the trace table UPDATE tracetable SET binarydata = (SELECT header FROM @header) WHERE RowNumber = 0

The trace table can now be opened with Profiler2008R2 and written back to a trace file. Hooray!

Yes, I know, using a trace table can take a whole lot of time and consume a lot of disk space when the file is huge (and typically RML traces are), but this is the best I could come up with.

I tried to look into the trace file itself, but I could not find a way to diff the binary contents in an editor. You may be smarter than me a give it a try: in that case, please, post a comment here.

Using this trick, ReadTrace can happily process the trace file and let you perform your benchmarks, at least until Microsoft decides to update RML Utilities to 2012.

UPDATE 11/08/2012: The use of a trace table is not necessary: the trace file can be updated in place, using the script found here.

Replay a T-SQL batch against all databases

It’s been quite a lot since I last posted on this blog and I apologize with my readers, both of them :-).

Today I would like to share with you a handy script I coded recently during a SQL Server health check. One of the tools I find immensely valuable for conducting a SQL Server assessment is Glenn Berry’s SQL Server Diagnostic Information Queries. The script contains several queries that can help you collect and analyze a whole lot of information about a SQL Server instance and I use it quite a lot.

The script comes with a blank results spreadsheet, that can be used to save the information gathered by the individual queries. Basically, the spreadsheet is organized in tabs, one for each query and has no preformatted column names, so that you can run the query, select the whole results grid, copy with headers and paste everything to the appropriate tab.

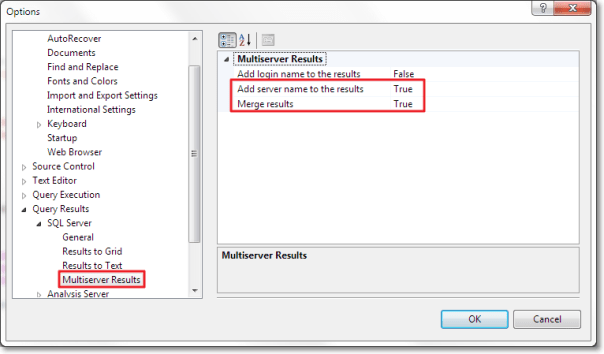

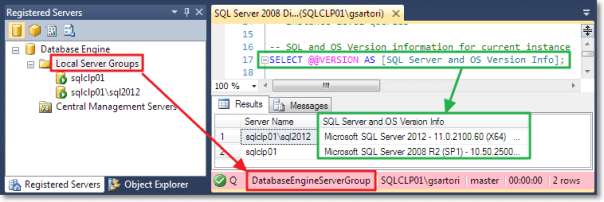

When working with multiple instances, SSMS can help automating this task with multiserver queries. Depending on your SSMS settings, the results of a multiserver query can be merged into a single grid, with an additional column holding the server name.

This feature is very handy, because it lets you run a statement against multiple servers without changing the statement itself.

This works very well for the queries in the first part of Glenn Berry’s script, which is dedicated to instance-level checks. The second part of the script is database-specific and you have to repeat the run+copy+paste process for each database in your instance.

It would be great if there was a feature in SSMS that allowed you to obtain the same results as the multiserver queries, scaled down to the database level. Unfortunately, SSMS has no such feature and the only possible solution is to code it yourself… or borrow my script!

Before rushing to the code, let’s describe briefly the idea behind and the challenges involved.

It would be quite easy to take a single statement and use it with sp_MsForEachDB, but this solution has several shortcomings:

- The results would display as individual grids

- There would be no easy way to determine which results grid belongs to which database

- The statement would have to be surrounded with quotes and existing quotes would have to be doubled, with an increased and unwanted complexity

The ideal tool for this task should simply take a statement and run it against all [user] databases without modifying the statement at all, merge the results in a single result set and add an additional column to hold the database name. Apparently, sp_MSForEachDB, besides being undocumented and potentially nasty, is not the right tool for the job.

That said, the only option left is to capture the statement from its query window, combining a trace, a loopback linked server and various other tricks.

Here’s the code:

-- =============================================

-- Author: Gianluca Sartori - @spaghettidba

-- Create date: 2012-06-26

-- Description: Records statements to replay

-- against all databases.

-- =============================================

CREATE PROCEDURE replay_statements_on_each_db

@action varchar(10) = 'RECORD',

@start_statement_id int = NULL,

@end_statement_id int = NULL

AS

BEGIN

SET NOCOUNT ON;

DECLARE @TraceFile nvarchar(256);

DECLARE @TraceFileNoExt nvarchar(256);

DECLARE @LastPathSeparator int;

DECLARE @TracePath nvarchar(256);

DECLARE @TraceID int;

DECLARE @fs bigint = 5;

DECLARE @r int;

DECLARE @spiid int = @@SPID;

DECLARE @srv nvarchar(4000);

DECLARE @ErrorMessage nvarchar(4000);

DECLARE @ErrorSeverity int;

DECLARE @ErrorState int;

DECLARE @sql nvarchar(max);

DECLARE @statement nvarchar(max);

DECLARE @column_list nvarchar(max);

IF @action NOT IN ('RECORD','STOPRECORD','SHOWQUERY','REPLAY')

RAISERROR('A valid @action (RECORD,STOPRECORD,SHOWQUERY,REPLAY) must be specified.',16,1)

-- *********************************************** --

-- * RECORD * --

-- *********************************************** --

IF @action = 'RECORD'

BEGIN

BEGIN TRY

-- Identify the path of the default trace

SELECT @TraceFile = path

FROM master.sys.traces

WHERE id = 1

-- Split the directory / filename parts of the path

SELECT @LastPathSeparator = MAX(number)

FROM master.dbo.spt_values

WHERE type = 'P'

AND number BETWEEN 1 AND LEN(@tracefile)

AND CHARINDEX('\', @TraceFile, number) = number

--' fix WordPress's sql parser quirks'

SELECT @TraceFile =

SUBSTRING(

@TraceFile

,1

,@LastPathSeparator

)

+ 'REPLAY_'

+ CONVERT(char(8),GETDATE(),112)

+ REPLACE(CONVERT(varchar(8),GETDATE(),108),':','')

+ '.trc'

SET @TraceFileNoExt = REPLACE(@TraceFile,N'.trc',N'')

-- create trace

EXEC sp_trace_create @TraceID OUTPUT, 0, @TraceFileNoExt, @fs, NULL;

--add filters and events

EXEC sp_trace_setevent @TraceID, 41, 1, 1;

EXEC sp_trace_setevent @TraceID, 41, 12, 1;

EXEC sp_trace_setevent @TraceID, 41, 13, 1;

EXEC sp_trace_setfilter @TraceID, 1, 0, 7, N'%fn_trace_gettable%'

EXEC sp_trace_setfilter @TraceID, 1, 0, 7, N'%replay_statements_on_each_db%'

EXEC sp_trace_setfilter @TraceID, 12, 0, 0, @spiid

--start the trace

EXEC sp_trace_setstatus @TraceID, 1

--create a global temporary table to store the statements

IF OBJECT_ID('tempdb..##replay_info') IS NOT NULL

DROP TABLE ##replay_info;

CREATE TABLE ##replay_info (

trace_id int,

statement_id int,

statement_text nvarchar(max)

);

--save the trace id in the global temp table

INSERT INTO ##replay_info (trace_id) VALUES(@TraceID);

END TRY

BEGIN CATCH

--cleanup the trace

IF EXISTS( SELECT 1 FROM sys.traces WHERE id = @TraceId AND status = 1 ) EXEC sp_trace_setstatus @TraceID, 0;

IF EXISTS( SELECT 1 FROM sys.traces WHERE id = @TraceId AND status = 0 ) EXEC sp_trace_setstatus @TraceID, 2;

IF OBJECT_ID('tempdb..##replay_info') IS NOT NULL

DROP TABLE ##replay_info;

SELECT @ErrorMessage = ERROR_MESSAGE(),

@ErrorSeverity = ERROR_SEVERITY(),

@ErrorState = ERROR_STATE();

RAISERROR(@ErrorMessage, @ErrorSeverity, @ErrorState);

END CATCH

END

-- *********************************************** --

-- * STOP RECORDING * --

-- *********************************************** --

IF @action = 'STOPRECORD'

BEGIN

BEGIN TRY

-- gather the trace id

SELECT @TraceID = trace_id

FROM ##replay_info;

IF @TraceId IS NULL

RAISERROR('No data has been recorded!',16,1)

DELETE FROM ##replay_info;

-- identify the trace file

SELECT TOP(1) @TraceFile = path

FROM sys.traces

WHERE path like '%REPLAY[_]______________.trc'

ORDER BY id DESC

-- populate the global temporary table with

-- the statements recorded in the

INSERT INTO ##replay_info

SELECT @TraceID,

ROW_NUMBER() OVER(ORDER BY (SELECT NULL)),

TextData

FROM fn_trace_gettable(@traceFile, DEFAULT)

WHERE TextData IS NOT NULL;

--stop and deltete the trace

IF EXISTS( SELECT 1 FROM sys.traces WHERE id = @TraceId AND status = 1 ) EXEC sp_trace_setstatus @TraceID, 0;

IF EXISTS( SELECT 1 FROM sys.traces WHERE id = @TraceId AND status = 0 ) EXEC sp_trace_setstatus @TraceID, 2;

END TRY

BEGIN CATCH

--stop and deltete the trace

IF EXISTS( SELECT 1 FROM sys.traces WHERE id = @TraceId AND status = 1 ) EXEC sp_trace_setstatus @TraceID, 0;

IF EXISTS( SELECT 1 FROM sys.traces WHERE id = @TraceId AND status = 0 ) EXEC sp_trace_setstatus @TraceID, 2;

SELECT @ErrorMessage = ERROR_MESSAGE(),

@ErrorSeverity = ERROR_SEVERITY(),

@ErrorState = ERROR_STATE();

RAISERROR(@ErrorMessage, @ErrorSeverity, @ErrorState);

END CATCH

END

-- *********************************************** --

-- * SHOW COLLECTED QUERIES * --

-- *********************************************** --

IF @action = 'SHOWQUERY'

BEGIN

BEGIN TRY

IF OBJECT_ID('tempdb..##replay_info') IS NULL

RAISERROR('No data has been recorded yet',16,1);

SET @sql = 'SELECT statement_id, statement_text FROM ##replay_info ';

IF @start_statement_id IS NOT NULL AND @end_statement_id IS NULL

SET @sql = @sql + ' WHERE statement_id = @start_statement_id ';

IF @start_statement_id IS NOT NULL AND @end_statement_id IS NOT NULL

SET @sql = @sql + ' WHERE statement_id

BETWEEN @start_statement_id AND @end_statement_id';

EXEC sp_executesql

@sql

,N'@start_statement_id int, @end_statement_id int'

,@start_statement_id

,@end_statement_id;

END TRY

BEGIN CATCH

SELECT @ErrorMessage = ERROR_MESSAGE(),

@ErrorSeverity = ERROR_SEVERITY(),

@ErrorState = ERROR_STATE();

RAISERROR(@ErrorMessage, @ErrorSeverity, @ErrorState);

END CATCH

END

-- *********************************************** --

-- * REPLAY * --

-- *********************************************** --

IF @action = 'REPLAY'

BEGIN

BEGIN TRY

--load the selected statement(s)

SET @statement = '

SET @sql = ''''

SELECT @sql += statement_text + '' ''

FROM ##replay_info

';

IF @start_statement_id IS NOT NULL AND @end_statement_id IS NULL

SET @statement =

@statement

+ ' WHERE statement_id = @start_statement_id ';

IF @start_statement_id IS NOT NULL AND @end_statement_id IS NOT NULL

SET @statement =

@statement

+ ' WHERE statement_id

BETWEEN @start_statement_id AND @end_statement_id';

EXEC sp_executesql

@statement

,N'@start_statement_id int, @end_statement_id int, @sql nvarchar(max) OUTPUT'

,@start_statement_id

,@end_statement_id

,@sql OUTPUT;

IF NULLIF(LTRIM(@sql),'') IS NULL

RAISERROR('Unable to locate the statement(s) specified.',16,1)

SET @srv = @@SERVERNAME; -- gather this server name

IF EXISTS (SELECT * FROM sys.servers WHERE name = 'TMPLOOPBACK')

EXEC sp_dropserver 'TMPLOOPBACK';

-- Create a loopback linked server

EXEC master.dbo.sp_addlinkedserver

@server = N'TMPLOOPBACK',

@srvproduct = N'SQLServ', -- it’s not a typo: it can’t be “SQLServer”

@provider = N'SQLNCLI', -- change to SQLOLEDB for SQLServer 2000

@datasrc = @srv;

-- Set the authentication to "current security context"

EXEC master.dbo.sp_addlinkedsrvlogin

@rmtsrvname = N'TMPLOOPBACK',

@useself = N'True',

@locallogin = NULL,

@rmtuser = NULL,

@rmtpassword = NULL;

-- Use a permanent table in Tempdb to store the output

IF OBJECT_ID('tempdb..___outputTable') IS NOT NULL

DROP TABLE tempdb..___outputTable;

-- Execute the statement in Tempdb to discover the column definition

SET @statement = '

SELECT TOP(0) *

INTO tempdb..___outputTable

FROM OPENQUERY(TMPLOOPBACK,''

SET FMTONLY OFF; EXEC tempdb.sys.sp_executesql N''''' + REPLACE(@sql,'''','''''''''') + '''''

'')

';

EXEC(@statement);

SET @statement = @sql;

-- Build the column list of the output table

SET @column_list = STUFF((

SELECT ',' + QUOTENAME(C.name)

FROM tempdb.sys.columns AS C

INNER JOIN tempdb.sys.tables AS T

ON C.object_id = T.object_id

WHERE T.name = '___outputTable'

FOR XML PATH('')

),1,1,SPACE(0));

-- Add a "Database Name" column

ALTER TABLE tempdb..___outputTable ADD Database__Name sysname;

-- Build a sql statement to execute

-- the recorded statement against all databases

SET @sql =

'N''INSERT tempdb..___outputTable(' + @column_list + ') EXEC(@statement); UPDATE tempdb..___outputTable SET Database__Name = DB_NAME() WHERE Database__Name IS NULL;''';

-- Build a statement to execute on each database context

;WITH dbs AS (

SELECT *,

system_db = CASE WHEN name IN ('master','model','msdb','tempdb') THEN 1 ELSE 0 END

FROM sys.databases

WHERE DATABASEPROPERTY(name, 'IsSingleUser') = 0

AND HAS_DBACCESS(name) = 1

AND state_desc = 'ONLINE'

)

SELECT @sql = (

SELECT

'EXEC ' + QUOTENAME(name) + '.sys.sp_executesql ' +

@sql + ',' +

'N''@statement nvarchar(max)'',' +

'@statement;' + char(10) AS [text()]

FROM dbs

ORDER BY name

FOR XML PATH('')

);

-- Execute multi-db sql and pass in the actual statement

EXEC sp_executeSQL @sql, N'@statement nvarchar(max)', @statement

--

SET @sql = '

SELECT Database__Name AS [Database Name], ' + @column_list + '

FROM tempdb..___outputTable

ORDER BY 1;

'

EXEC sp_executesql @sql;

EXEC tempdb.sys.sp_executesql N'DROP TABLE ___outputTable';

END TRY

BEGIN CATCH

SELECT @ErrorMessage = ERROR_MESSAGE(),

@ErrorSeverity = ERROR_SEVERITY(),

@ErrorState = ERROR_STATE();

RAISERROR(@ErrorMessage, @ErrorSeverity, @ErrorState);

END CATCH

END

END

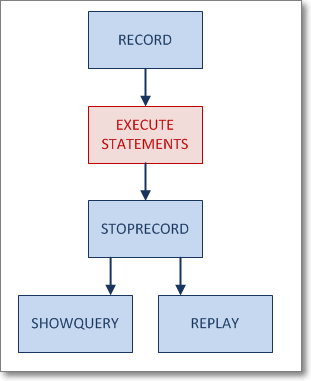

As you can see, the code creates a stored procedure that accepts a parameter named @action, which is used to determine what the procedure should do. Specialized sections of the procedure handle every possible value for the parameter, with the following logic:

First of all you start recording, then you execute the statements to repeat on each database, then you stop recording. From that moment on, you can enumerate the statements captured and execute them, passing a specific statement id or a range of ids.

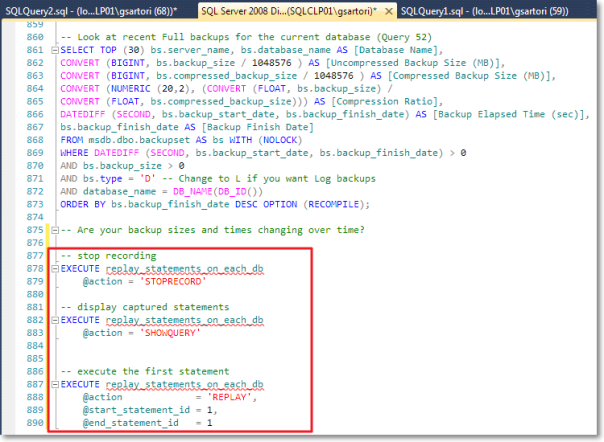

The typical use of the procedure could look like this:

-- start recording

EXECUTE replay_statements_on_each_db

@action = 'RECORD'

-- run the statements you want to replay

SELECT DATABASEPROPERTYEX(DB_NAME(),'Recovery') AS RecoveryModel

-- stop recording

EXECUTE replay_statements_on_each_db

@action = 'STOPRECORD'

-- display captured statements

EXECUTE replay_statements_on_each_db

@action = 'SHOWQUERY'

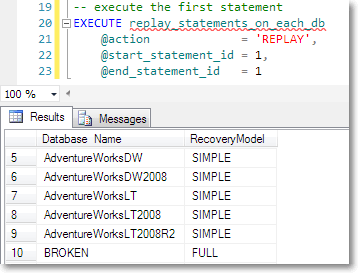

-- execute the first statement

EXECUTE replay_statements_on_each_db

@action = 'REPLAY',

@start_statement_id = 1,

@end_statement_id = 1

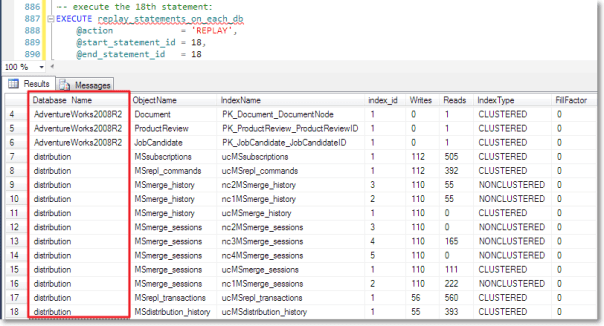

You can see the results of the script execution here:

Obviuosly this approach is totally overkill for just selecting the database recovery model, but it can become very handy when the statement’s complexity raises.

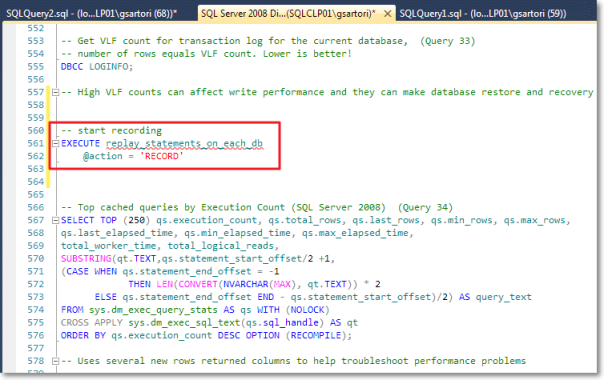

This seems a perfect fit for Glen Berry’s diagnostic queries, which is where we started from. You can go back to that script and add the record instructions just before the database specific queries start:

At the end of the script you can add the instructions to stop recording and show the queries captured by the procedure.

Once the statements are recorded, you can run any of the statements against all databases. For instance, I decided to run the top active writes index query (query 51).

As expected, the procedure adds the database name column to the result set and then displays the merged results.

You may have noticed that I skipped the first statement in the database-specific section of the script, which is a DBCC command. Unfortunately, not all kind of statement can be captured with this procedure, because some limitations apply. Besides the inability to capture some DBCC commands, please note that the column names must be explicitly set.

I think that a CLR procedure could overcome these limitations, or at least some of them. I hope I will find the time to try the CLR method soon and I promise I will blog the results.

Hide service accounts from Windows logon screen

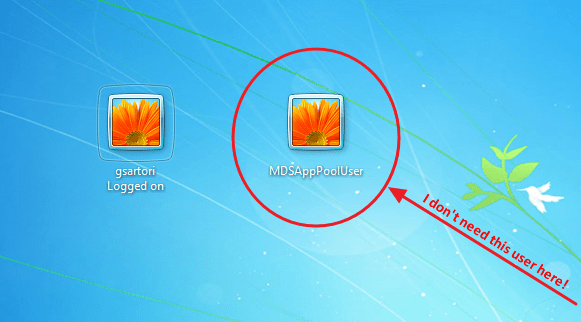

If you are playing with multiple Virtual Machines and multiple SQL Server instances and features, it’s very likely that your virtual machines logon screens are showing all the users you set up for service accounts.

In this case, my Master Data Services playground shows the “MDSAppPoolUser” I set up for the MDS web application:

Needless to say that I will never need to logon as one of those service accounts and I would be happier if the logon screen just hid them.

The good news is that Windows can do that, with a simple registry hack.

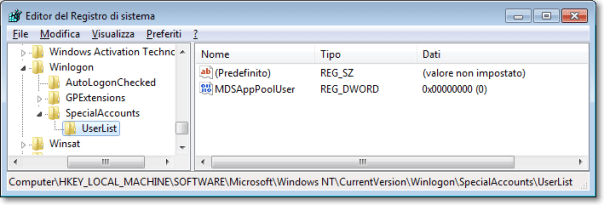

The registry key to add is the following:

HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows NT\CurrentVersion\Winlogon\SpecialAccounts\UserList

Under the UserList key, you just have to add a REG_DWORD named after each user you want to hide, with a value of 0 (zero):

To verify that the service account user has been hidden from your logon screen, you can select “change user” from the start menu:

That’s it! No more service accounts on your logon screen.

If you want to re-enable those account on the logon screen, just change the DWORD value to 1 (one).

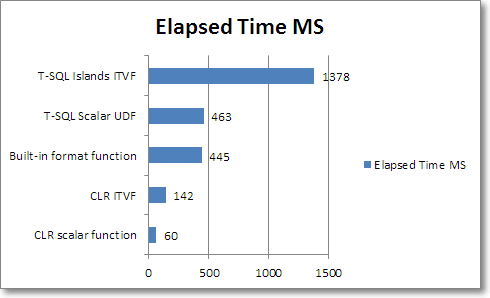

SQL Server and Custom Date Formats

Today SQL Server Central is featuring my article Dealing with custom date formats in T-SQL.

There’s a lot of code on that page and I thought that making it available for download would make it easier to play with.

You can download the code from this page or from the Code Repository.

- CustomDateFormat.cs

- formatDate_Islands_iTVF.sql

- formatDate_Recursive_iTVF.sql

- formatDate_scalarUDF.sql

- parseDate_Islands_iTVF.sql

I hope you enjoy reading the article as much as I enjoyed writing it.

How to Eat a SQL Elephant in 10 Bites

Sometimes, when you have to optimize a poor performing query, you may find yourself staring at a huge statement, wondering where to start.

Some developers think that a single elephant statement is better than multiple small statements, but this is not always the case.

Let’s try to look from the perspective of software quality:

- Efficiency

The optimizer will likely come up with a suboptimal plan, giving up early on optimizations and transformations. - Reliability

Any slight change in statistics could lead the optimizer to produce a different and less efficient plan. - Maintainability

A single huge statement is less readable and maintainable than multiple small statements.

With those points in mind, the only sensible thing to do is cut the elephant into smaller pieces and eat them one at a time.

This is how I do it:

- Lay out the original code and read the statement carefully

- Decide whether a full rewrite is more convenient

- Set up a test environment

- Identify the query parts

- Identify the main tables

- Identify non correlated subqueries and UNIONs

- Identify correlated subqueries

- Write a query outline

- Break the statement into parts with CTEs, views, functions and temporary tables

- Merge redundant subqueries

- Put it all together

- Verify the output based on multiple different input values

- Comment your work thoroughly

1. Lay out the original code and read the statement carefully

Use one of the many SQL formatters you can find online. My favorite one is Tao Klerk’s Poor Man’s T-SQL Formatter: it’s very easy to use and configure and it comes with a handy SSMS add-in and plugins for Notepad++ and WinMerge. Moreover, it’s free and open source. A must-have.

Once your code is readable, don’t rush to the keyboard: take your time and read it carefully.

- Do you understand (more or less) what it is supposed to do?

- Do you think you could have coded it yourself?

- Do you know all the T-SQL constructs it contains?

If you answered “yes” to all the above, you’re ready to go to the next step.

2. Decide whether a full rewrite is more convenient

OK, that code sucks and you have to do something. It’s time to make a decision:

- Take the business rules behind the statement and rewrite it from scratch

When the statement is too complicated and unreadable, it might be less time-consuming to throw the old statement away and write your own version.

Usually it is quite easy when you know exactly what the code is supposed to do. Just make sure you’re not making wrong assumptions and be prepared to compare your query with the original one many times. - Refactor the statement

When the business rules are unclear (or unknown) starting from scratch is not an option. No, don’t laugh! The business logic may have been buried in the sands of time or simply you may be working on a query without any will to understand the business processes behind it.

Bring a big knife: you’re going to cut the elephant in pieces. - Leave the statement unchanged

Sometimes the statement is too big or too complicated to bother taking the time to rewrite it. For instance, this query would take months to rewrite manually.

It works? Great: leave it alone.

3. Set up a test environment

It doesn’t matter how you decide to do it: at the end of the day you will have to compare the results of your rewritten query with the results of the “elephant” and make sure you did not introduce errors in your code.

The best way to do this is to prepare a script that compares the results of the original query with the results of your rewritten version. This is the script I am using (you will find it in the code repository, as usual).

-- =============================================

-- Author: Gianluca Sartori - spaghettidba

-- Create date: 2012-03-14

-- Description: Runs two T-SQL statements and

-- compares the results

-- =============================================

-- Drop temporary tables

IF OBJECT_ID('tempdb..#original') IS NOT NULL

DROP TABLE #original;

IF OBJECT_ID('tempdb..#rewritten') IS NOT NULL

DROP TABLE #rewritten;

-- Store the results of the original

-- query into a temporary table

WITH original AS (

<original, text, >

)

SELECT *

INTO #original

FROM original;

-- Add a sort column

ALTER TABLE #original ADD [______sortcolumn] int identity(1,1);

-- Store the results of the rewritten

-- query into a temporary table

WITH rewritten AS (

<rewritten, text, >

)

SELECT *

INTO #rewritten

FROM rewritten;

-- Add a sort column

ALTER TABLE #rewritten ADD [______sortcolumn] int identity(1,1);

-- Compare the results

SELECT 'original' AS source, *

FROM (

SELECT *

FROM #original

EXCEPT

SELECT *

FROM #rewritten

) AS A

UNION ALL

SELECT 'rewritten' AS source, *

FROM (

SELECT *

FROM #rewritten

EXCEPT

SELECT *

FROM #original

) AS B;

The script is a SSMS query template that takes the results of the original and the rewritten query and compares the resultsets, returning all the missing or different rows. The script uses two CTEs to wrap the two queries: this means that the ORDER BY predicate (if any) will have to be moved outside the CTE.

Also, the results of the two queries are piped to temporary tables, which means that you can’t have duplicate column names in the result set.

Another thing worth noting is that the statements to compare cannot be stored procedures. One simple way to overcome this limitation is to use the technique I described in this post.

The queries inside the CTEs should then be rewritten as:

SELECT * FROM OPENQUERY(LOOPBACK,'<original, text,>')

Obviously, all the quotes must be doubled, which is the reason why I didn’t set up the script this way in the first place. It’s annoying, but it’s the only way I know of to pipe the output of a stored procedure into a temporary table without knowing the resultset definition in advance. If you can do better, suggestions are always welcome.

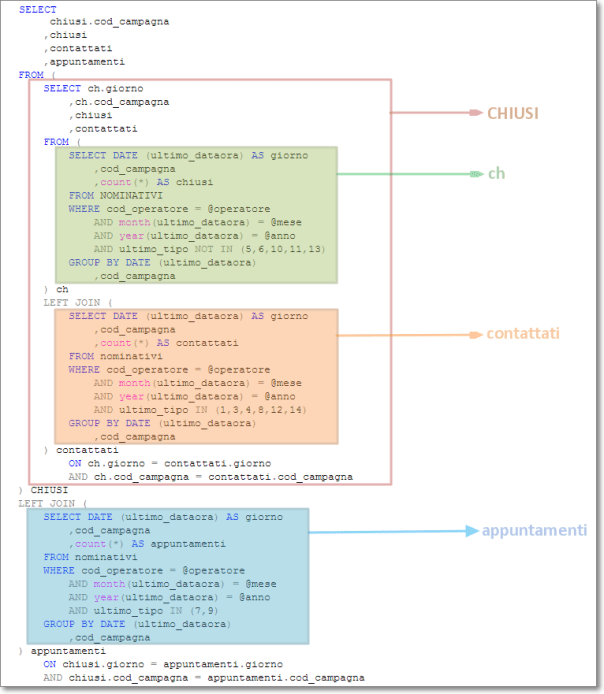

4. Identify the query parts

OK, now you have everything ready and you can start eating the elephant. The first thing to do is to identify all the autonomous blocks in the query and give them a name. You can do this at any granularity and repeat the task as many times as you like: the important thing is that at the end of this process you have a list of query parts and a name for each part.

Identify the main tables

Usually I like the idea that the data comes from one “main” table and all the rest comes from correlated tables. For instance, if I have to return a resultset containing some columns from the “SalesOrderHeader” table and some columns from the “SalesOrderDetail” table, I consider SalesOrderHeader the main table and SalesOrderHeader a correlated table. It fits well with my mindset, but you are free to see things the way you prefer.

Probably these tables are already identified by an alias: note down the aliases and move on.

Identify non correlated subqueries and UNIONs

Non-correlated subqueries are considered as inline views. Often these subqueries are joined to the main tables to enrich the resultset with additional columns.

Don’t be scared away by huge subqueries: you can always repeat all the steps for any single subquery and rewrite it to be more compact and readable.

Again, just note down the aliases and move to the next step.

Identify correlated subqueries

Correlated subqueries are not different from non-correlated subqueries, with the exception that you will have less freedom to move them from their current position in the query. However, that difference doesn’t matter for the moment: give them a name and note it down.

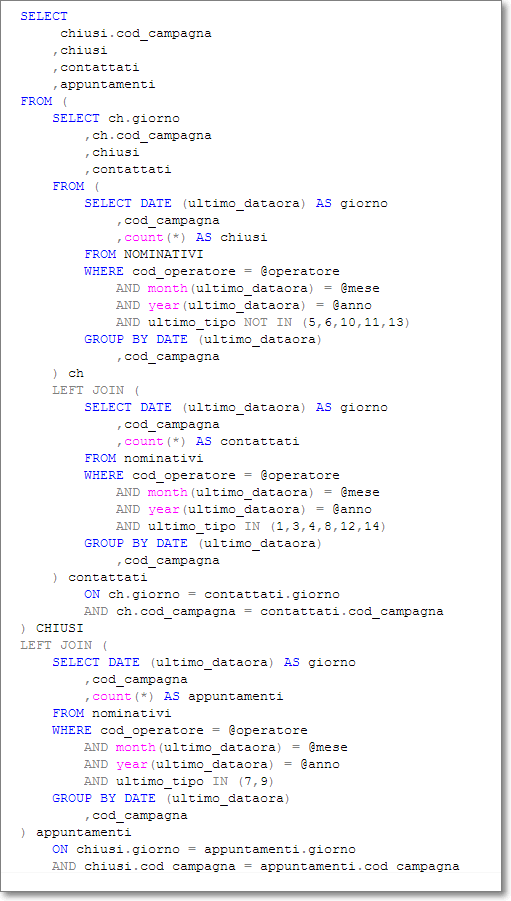

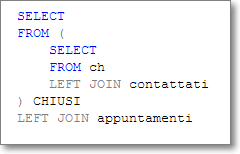

5. Write a query outline

Use the names you identified in the previous step and write a query outline. It won’t execute, but it gives you the big picture.

If you really want the big picture, print the query. It may seem crazy, but sometimes I find it useful to be able to see the query as a whole, with all the parts with their names highlighted in different colors.

Yes, that’s a single SELECT statement, printed in Courier new 8 pt. on 9 letter sheets, hanging on the wall in my office.

6. Break the statement in parts with CTEs, views, functions and temporary tables

SQL Server offers a fair amount of tools that allow breaking a single statement into parts:

- Common Table Expressions

- Subqueries

- Views

- Inline Table Valued Functions

- Multi-Statement Table Valued Functions

- Stored procedures

- Temporary Tables

- Table Variables

Ideally, you will choose the one that performs best in your scenario, but you could also take usability and modularity into account.

CTEs and subqueries are a good choice when the statement they contain is not used elsewhere and there is no need to reuse that code.

Table Valued functions and views, on the contrary, are most suitable when there is an actual need to incapsulate the code in modules to be reused in multiple places.

Generally speaking, you will use temporary tables or table variables when the subquery gets used more than once in the statement, thus reducing the load.

Though I would really like to go into deeper details on the performance pros and cons of each construct, that would take an insane amount of time and space. You can find a number of articles and blogs on those topics and I will refrain from echoing them here.

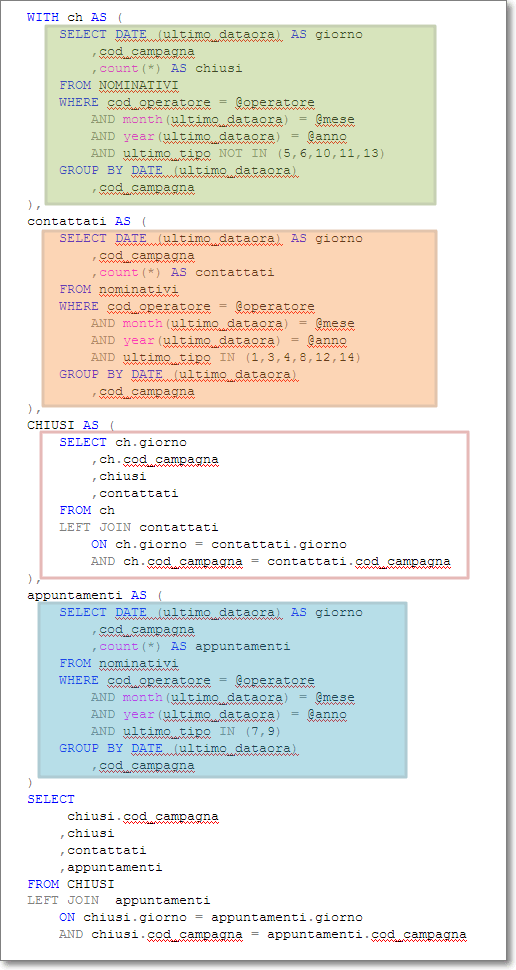

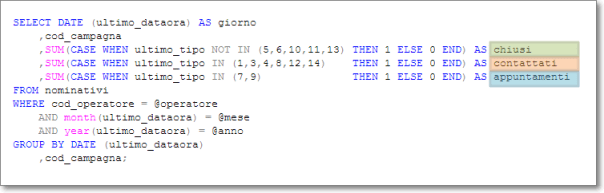

7. Merge redundant subqueries

Some parts of your query may be redundant and you may have the opportunity to merge those parts. The merged query will be more compact and will likely perform significantly better.

For instance, you could have multiple subqueries that perform aggregate calculations on the same row set:

SELECT ProductID

,Name

,AverageSellOutPrice = (

SELECT AVG(UnitPrice)

FROM Sales.SalesOrderDetail

WHERE ProductID = PR.ProductID

)

,MinimumSellOutPrice = (

SELECT MIN(UnitPrice)

FROM Sales.SalesOrderDetail

WHERE ProductID = PR.ProductID

)

,MaximumSellOutPrice = (

SELECT MAX(UnitPrice)

FROM Sales.SalesOrderDetail

WHERE ProductID = PR.ProductID

)

FROM Production.Product AS PR;

The above query can be rewritten easily to avoid hitting the SalesOrderDetail table multiple times:

SELECT ProductID

,Name

,AverageSellOutPrice

,MinimumSellOutPrice

,MaximumSellOutPrice

FROM Production.Product AS PR

CROSS APPLY (

SELECT AVG(UnitPrice), MIN(UnitPrice), MAX(UnitPrice)

FROM Sales.SalesOrderDetail

WHERE ProductID = PR.ProductID

) AS SellOuPrices (AverageSellOutPrice, MinimumSellOutPrice, MaximumSellOutPrice);

Another typical situation where you can merge some parts is when multiple subqueries perform counts on slightly different row sets:

SELECT ProductID

,Name

,OnlineOrders = (

SELECT COUNT(*)

FROM Sales.SalesOrderHeader AS SOH

WHERE SOH.OnlineOrderFlag = 1

AND EXISTS (

SELECT *

FROM Sales.SalesOrderDetail

WHERE SalesOrderID = SOH.SalesOrderID

AND ProductID = PR.ProductID

)

)

,OfflineOrders = (

SELECT COUNT(*)

FROM Sales.SalesOrderHeader AS SOH

WHERE SOH.OnlineOrderFlag = 0

AND EXISTS (

SELECT *

FROM Sales.SalesOrderDetail

WHERE SalesOrderID = SOH.SalesOrderID

AND ProductID = PR.ProductID

)

)

FROM Production.Product AS PR;

The only difference between the two subqueries is the predicate on SOH.OnlineOrderFlag. The two queries can be merged introducing a CASE expression in the aggregate:

SELECT ProductID

,Name

,ISNULL(OnlineOrders,0) AS OnlineOrders

,ISNULL(OfflineOrders,0) AS OfflineOrders

FROM Production.Product AS PR

CROSS APPLY (

SELECT SUM(CASE WHEN SOH.OnlineOrderFlag = 1 THEN 1 ELSE 0 END),

SUM(CASE WHEN SOH.OnlineOrderFlag = 0 THEN 1 ELSE 0 END)

FROM Sales.SalesOrderHeader AS SOH

WHERE EXISTS (

SELECT *

FROM Sales.SalesOrderDetail

WHERE SalesOrderID = SOH.SalesOrderID

AND ProductID = PR.ProductID

)

) AS Orderscount (OnlineOrders, OfflineOrders);

There are infinite possibilities and enumerating them all would be far beyond the scope of this post. This is one of the topics that my students often find hard to understand and I realize that it really takes some experience to identify merge opportunities and implement them.

8. Put it all together

Remember the query outline you wrote previously? It’s time to put it into action.

Some of the identifiers may have gone away in the merge process, some others are still there and have been transformed into different SQL constructs, such as CTEs, iTVFs or temporary tables.

9. Verify the output based on multiple different input values

Now it’s time to see if your new query works exactly like the original one. You already have a script for that: you can go on and use it.

Remember that the test can be considered meaningful only if you repeat it a reasonably large number of times, with different parameters. Some queries could appear to be identical, but still be semantically different. Make sure the rewritten version handles NULLs and out-of-range parameters in the same way.

10.Comment your work thoroughly

If you don’t comment your work, somebody will find it even more difficult to maintain than the elephant you found when you started.

Comments are for free and don’t affect the query performance in any way. Don’t add comments that mimic what the query does, instead, write a meaningful description of the output of the query.

For instance, given a code fragment like this:

SELECT SalesOrderID, OrderDate, ProductID

INTO #orders

FROM Sales.SalesOrderHeader AS H

INNER JOIN Sales.SalesOrderDetail AS D

ON H.SalesOrderID = D.SalesOrderID

WHERE OrderDate BETWEEN @StartDate AND @EndDate

a comment like “joins OrderHeader to OrderDetail” adds nothing to the clarity of the code. A comment like “Selects the orders placed between the @StartDate and @EndDate and saves the results in a temporary table for later use” would be a much better choice.

Elephant eaten. (Burp!)

After all, it was not too big, was it?

SQL Server 2012 RTM

Today Microsoft announced that SQL Server 2012 codename “Denali” has Released To Manufacturing and will be available for download starting from April 1.

April fool’s? I don’t think so. Time will tell.

One thing is sure: each and every bug discovered in this version will be ascribed to that unusual release date. 🙂

If you’re a MSDN/TechNet subscriber, you can download them as of March 7. Yes, tomorrow! Keep your fingers ready to push the download button!

Mirrored Backups: a useful feature?

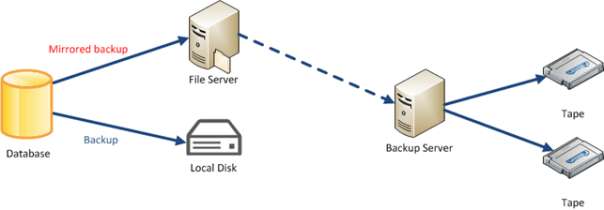

One of the features found in the Enterprise Edition of SQL Server is the ability to take mirrored backups. Basically, taking a mirrored backup means creating additional copies of the backup media (up to three) using a single BACKUP command, eliminating the need to perform the copies with copy or robocopy.

The idea behind is that you can backup to multiple locations and increase the protection level by having additional copies of the backup set. In case one of the copies gets lost or corrupted, you can use the mirrored copy to perform a restore.

BACKUP DATABASE [AdventureWorks2008R2] TO DISK = 'C:\backup\AdventureWorks2008R2.bak' MIRROR TO DISK = 'H:\backup\AdventureWorks2008R2.bak' WITH FORMAT; GO

Another possible scenario for a mirrored backup is deferred tape migration: you can backup to a local disk and mirror to a shared folder on a file server. That way you could have a local copy of the backup set and restore it in case of need and let the mirrored copy migrate to tape when the disk backup software processes the file server’s disks.

Mirrored backup sets can be combined with striped backups, given that all the mirror copies contain the same number of stripes:

BACKUP DATABASE [AdventureWorks2008R2] TO DISK = 'C:\backup\AdventureWorks2008R2_1.bak', DISK = 'C:\backup\AdventureWorks2008R2_2.bak', DISK = 'C:\backup\AdventureWorks2008R2_3.bak' MIRROR TO DISK = 'H:\AdventureWorks2008R2_1.bak', DISK = 'H:\AdventureWorks2008R2_2.bak', DISK = 'H:\AdventureWorks2008R2_3.bak' WITH FORMAT; GO

When restoring from a striped + mirrored backup set, you can mix the files from one media with the files from another media, as each mirrored copy is an exact copy of the main backup set.

RESTORE DATABASE [AW_Restore] FROM DISK = N'C:\backup\AdventureWorks2008R2_1.bak', -- main media DISK = N'H:\AdventureWorks2008R2_2.bak', -- mirror media DISK = N'H:\AdventureWorks2008R2_3.bak' -- mirror media WITH FILE = 1, MOVE N'AdventureWorks2008R2_Data' TO N'C:\DATA\AW_Restore.mdf', MOVE N'AdventureWorks2008R2_Log' TO N'C:\DATA\AW_Restore_1.ldf', MOVE N'FileStreamDocuments2008R2' TO N'C:\DATA\AW_Restore_2.Documents2008R2', NOUNLOAD, STATS = 10; GO

Looks like a handy feature! However, some limitations apply:

- If striped, the mirror must contain the same number of stripes.

Looks sensible: each mirror copy is an exact copy of the main backup set, which would be impossible with a different number of devices. - Must be used with FORMAT option.

No append supported: the destination device must be overwritten. - Destination media must be of the same type.

You cannot use disk and tape together. I can understand the reason for this restriction, but, actually, it makes this feature much less useful than it could be. - Fails the backup if ANY of the mirrored copies fails.

This is the main pain point: creating multiple copies of the same backup set can end up reducing the protection level, because the whole backup process fails when at least one of the destination media is unavailable or faulty.

Does this mean that the ability to take mirrored backups is a useless feature?

Well, it highly depends on your point of view and what matters to you most. I would prefer having at least one copy of the database backup available rather than no backup at all.

Keeping in mind that:

- the same exact result can be accomplished using copy, xcopy or robocopy

- non-local copies are much more likely to fail rather than local copies

- taking multiple local copies is quite pointless

- Enterprise Edition costs a lot of money

- There’s no GUI in SSMS backup dialog, nor in Maintenance Plans

…I think I could live without this feature. At least, this is not one of the countless reasons why I would prefer Enterprise over cheaper editions.