Blog Archives

Replaying Workloads to a different Database

One of the features I was asked to implement for WorkloadTools is the ability to replay commands to a database name different from the one recorded in the source workload.

This is something that I had been planning to implement for a while and it totally makes sense. Usually, you have two identical environments for the workload capture and replay, both with the same databases. Sometimes, however, it makes sense to use a different database as the target for the workload, for reasons such as resource constraints or ease of testing.

WorkloadTools now supports replaying commands to a different database, using the DatabaseMap property of the ReplayConsumer.

DatabaseMap is a Dictionary of strings, so it can be expressed in the .json file as a set of key/value pairs, where each key is an original database name and the corresponding value is the new target database for the command.

Here is an example:

{

"__type": "ReplayConsumer",

"ConnectionInfo": {

"ServerName": "somedatabase.database.windows.net",

"DatabaseName": "mario",

"UserName": "itsame",

"Password": "itsamario"

},

"DatabaseMap": {

"Mario": "Luigi",

"Peach": "Bowser"

}

}

In this case, whenever a command from the database “Mario” is found, it is replayed against the database “Luigi”. Similarly, when the database “Peach” is found, the command gets replayed on “Bowser”.

Please note that DatabaseMap only changes the database context and does not substitute any reference to the original database name in the code. For instance, if you had something like EXEC Mario.sys.sp_executesql 'SELECT 1', this would not be intercepted by DatabaseMap and would remain unchanged in your code.
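To make the distinction between database context and command text concrete, here is a minimal Python sketch. It is only a model of the behavior described above, not WorkloadTools code: the `Command` class and `resolve_target` function are hypothetical names, and the exact-match lookup with a fallback to the original name for unmapped databases is an assumption.

```python
# Illustrative model only: this is not WorkloadTools code.
from dataclasses import dataclass


@dataclass
class Command:
    database: str  # database context recorded in the source workload
    text: str      # command text, replayed as-is


def resolve_target(command: Command, database_map: dict[str, str]) -> Command:
    """Return the command with its database context remapped.

    Assumption: names are matched exactly and unmapped databases keep
    their original name. The command text is never rewritten.
    """
    target = database_map.get(command.database, command.database)
    return Command(database=target, text=command.text)


database_map = {"Mario": "Luigi", "Peach": "Bowser"}

cmd = Command("Mario", "EXEC Mario.sys.sp_executesql 'SELECT 1'")
replayed = resolve_target(cmd, database_map)

print(replayed.database)  # the context is now Luigi
print(replayed.text)      # the text still references Mario
```

In this model the three-part name inside the command text survives untouched, which is why such references have to be handled separately.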

Happy benchmarking with WorkloadTools!

Performing a real-time replay with WorkloadTools

In a previous blog post, I showed you how to use WorkloadTools to replay a workload in two different scenarios. However, there is a third scenario that is worth exploring: the real-time replay.

Before we jump to how, I’d better spend some words on why a real-time replay is needed.

The main reason is the complexity involved in capturing and analyzing a workload for extended periods of time. Especially when performing migrations and upgrades, it is crucial to capture the entire business cycle, in order to cover all possible queries issued by the applications. All existing benchmarking tools require you to capture the workload to a file before it can be analyzed and/or replayed, but this becomes increasingly complicated as the length of the business cycle grows.

The first complication has to do with the size of the trace files, which have to be stored on a disk location, either local or remote. It is not reasonable to expect to capture a workload on a busy server for, let’s say, two weeks, because the size of the trace files can easily get to a few hundred GBs in less than one hour.
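A quick back-of-the-envelope calculation shows why this does not scale. The sketch below assumes a constant growth rate of 200 GB per hour, which is only an illustrative stand-in for "a few hundred GBs in less than one hour"; the function name is hypothetical.

```python
def projected_capture_size_gb(gb_per_hour: float, days: int) -> float:
    """Total trace size if the capture grows at a constant hourly rate."""
    return gb_per_hour * 24 * days


# Assumed figure: 200 GB/hour on a busy server, captured for two weeks.
two_weeks = projected_capture_size_gb(gb_per_hour=200, days=14)
print(f"{two_weeks:,.0f} GB (~{two_weeks / 1024:.1f} TB)")
```

Even if the real rate were a fraction of the assumed one, the total would still be measured in terabytes.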

The second complication has to do with the ability of the benchmarking tools to process the trace files: bigger and more numerous files enormously increase the chances of breaking the tools. If you ever captured a big workload to a set of trace files to feed it to ReadTrace, you probably know what I’m talking about and chances are that you witnessed a crash or two. If you tried it with DReplay, you now probably have an ample collection of exotic and unhelpful error messages.

In this context, being able to process the events as soon as they occur is a plus, so that storing them to a file of any type is not needed. This is exactly what WorkloadTools does with the real-time replay feature.

Performing a real-time replay

All the considerations made for replaying a saved workload also apply to this scenario. First of all, you will need to set up a target environment that contains an up-to-date copy of the production database. Log shipping is a great tool for this: you can restore a full backup from production and restore all logs until the two databases are in sync. Immediately after restoring the last log backup with recovery, you can start the capture and replay on the production server.

The .json file for this activity will probably look like this:

{

"Controller": {

"Listener":

{

"__type": "ExtendedEventsWorkloadListener",

"ConnectionInfo":

{

"ServerName": "SourceInstance"

},

"DatabaseFilter": "YourDatabase"

},

"Consumers":

[

{

"__type": "ReplayConsumer",

"ConnectionInfo":

{

"ServerName": "TargetInstance",

"DatabaseName": "YourDatabase"

}

},

{

"__type": "AnalysisConsumer",

"ConnectionInfo":

{

"ServerName": "AnalysisInstance",

"DatabaseName": "SqlWorkload",

"SchemaName": "baseline"

},

"UploadIntervalSeconds": 60

}

]

}

}

On the target server, you can use SqlWorkload again to capture the performance data produced by the replay, using a .json file similar to the one used when analyzing the replay of a saved workload:

{

"Controller": {

"Listener":

{

"__type": "ExtendedEventsWorkloadListener",

"ConnectionInfo":

{

"ServerName": "TargetInstance",

"DatabaseName": "YourDatabase"

}

},

"Consumers":

[

{

"__type": "AnalysisConsumer",

"ConnectionInfo":

{

"ServerName": "AnalysisInstance",

"DatabaseName": "SqlWorkload",

// different schema from SqlWorkload 1

"SchemaName": "replay"

},

"UploadIntervalSeconds": 60

}

]

}

}

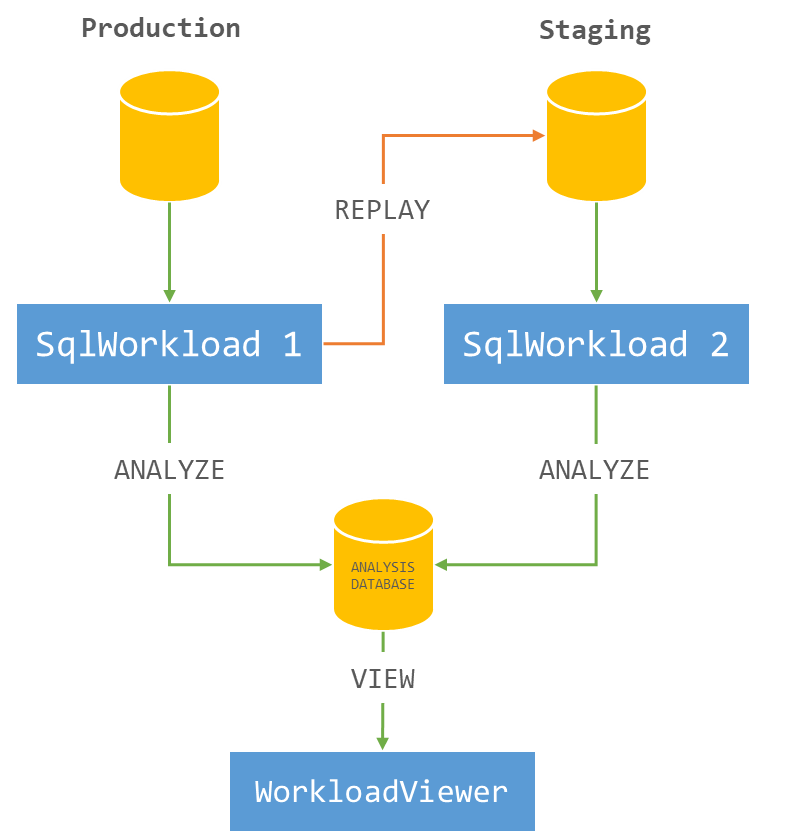

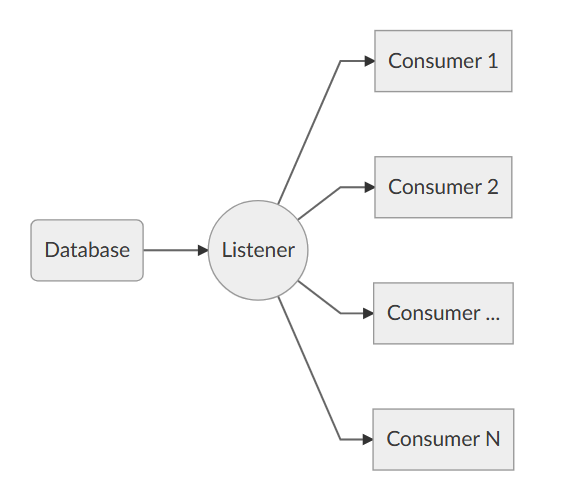

The overall architecture of the real-time replay looks like this:

It is crucial to start both instances of SqlWorkload at the same time, as the time dimension is always measured as the offset from the start of the analysis: starting both instances at the same time ensures that the same queries get executed around the same offset, so that you can compare apples to apples.

It is also extremely important to make sure that the target environment can keep up with the workload being replayed, otherwise the number of queries found in the same interval will never match between the two environments and the two workloads will start to diverge more and more. You can observe the data in WorkloadViewer while it gets written by the two analysis consumers and you can compare the number of batches per second to make sure that the target environment does not get overwhelmed by the workload. To refresh the data in WorkloadViewer, simply press F5.
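The idea of comparing the two workloads by offset from the start can be sketched in a few lines of Python. This is only an illustration of the reasoning, not how WorkloadViewer or the analysis consumer work internally: the function names, the 60-second interval, the 10% tolerance and the sample timestamps are all assumptions.

```python
from collections import Counter
from datetime import datetime, timedelta


def batches_per_interval(event_times, start, interval_seconds=60):
    """Count events per interval, keyed by the interval's offset from `start`."""
    counts = Counter()
    for ts in event_times:
        offset = int((ts - start).total_seconds() // interval_seconds)
        counts[offset] += 1
    return counts


def diverging_intervals(baseline, replay, tolerance=0.10):
    """List intervals where the replay count differs from the baseline
    count by more than `tolerance` (as a fraction of the baseline)."""
    flagged = []
    for offset in sorted(baseline):
        expected = baseline[offset]
        actual = replay.get(offset, 0)
        if abs(actual - expected) > tolerance * expected:
            flagged.append((offset, expected, actual))
    return flagged


# Hypothetical data: both captures start at the same time,
# but the target falls behind during the second minute.
source_start = datetime(2019, 1, 1, 9, 0, 0)
replay_start = datetime(2019, 1, 1, 9, 0, 0)

source_events = [source_start + timedelta(seconds=s) for s in (5, 20, 50, 70, 90, 110)]
replay_events = [replay_start + timedelta(seconds=s) for s in (6, 22, 55, 75)]

baseline = batches_per_interval(source_events, source_start)
replay = batches_per_interval(replay_events, replay_start)

# Each tuple is (interval offset, baseline count, replay count).
print(diverging_intervals(baseline, replay))
```

If the two instances were started at different times, the same events would land in different offsets and the comparison would flag intervals even on a perfectly healthy target.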

The analysis and comparison of a real-time replay is not different from a deferred replay and you can use the same tools and apply the same considerations to both situations.

The interesting part of a real-time replay is the ability to perform the replay for extended periods of time, without the need to store the workload data to any type of intermediate format and without the need to analyze the workload data as a whole before you can proceed with the replay. The possibilities that this approach opens are really interesting and can be outside the usual scope of benchmarking tools.

As an example, you could decide to have a staging environment where you want to test the performance impact of new implementations directly against a production workload, gaining immediate insights regarding performance and catching runaway queries before they hit production. The traditional approach to this problem has always been based on test harnesses that simulate the critical parts of the workload, but building and maintaining these tools can be time consuming. With WorkloadTools you can measure the performance impact of your changes without having to build new tools and you can focus on what matters to you the most: your business.

Workload replay with WorkloadTools

In my last post, I described how to capture a workload to a file, in order to run a replay against your target environment at a later time. Well, that later time has come and you’re ready to roll.

Of course, WorkloadTools has got you covered.

Before I show you how SqlWorkload can run the replay, reading all data from the workload file, I need to spend some time describing how to set up your target environment. It may look superfluous, but getting this part right is the key to a successful benchmarking activity and allows you to make sure that you are comparing apples with apples.

Choosing a methodology

First of all, you need to decide what you want to discover and make sure you understand entirely how performing the replay will help you in your investigation. There are mainly two types of methodologies:

- Capture in production, analyze the workload, replay in test, analyze and compare the results

- Capture in production, replay and analyze in test to establish a baseline, change something and replay again in test to obtain a second benchmark, then compare the results

The first method is useful when you are interested in comparing two different scenarios that cannot be easily reproduced in a test environment. As an example of this situation, imagine a production server that sits on a SAN storage with no more space available to create a test environment. Management wants to buy a new SAN and obtains a box to conduct a POC. In this case you can set up a test environment on the new SAN and compare the benchmarks on the two different storages.

This way of benchmarking is not always ideal, because it tries to compare a workload captured in production with a workload captured as the replay of the production one. The two are not the same: they depend on the filters applied while capturing in production and can be affected by the conditions under which the replay is being performed. For this reason, this methodology should be used only when it is possible to accept the approximation due to resource constraints.

The second method is more convoluted, but it is often able to deliver more accurate results. With this method, both benchmarks are obtained by measuring the replay of the original workload in a controlled test environment, so that the way the replay itself is performed does not affect the comparison.

This second method is easier to use in situations when the test environment can be reused to obtain the two scenarios to measure. Imagine that you want to observe the effect of changing compatibility level or some other database level options: in this case you would need to replay the original workload, change compatibility level, run a second replay and compare the performance in the two scenarios.

However, not even this method is perfect and you really need to make sure that you understand what you want to measure. If you are looking for plan regressions due to changing something at the instance, database or object level, you probably don’t care much about the relative performance of the hardware, because it is unlikely to affect query performance more than the plan regression itself.

Setting up the environment

Another thing that has to be taken into account is what data the replay will be performed against. In order to obtain meaningful performance information, the workload should ideally be performed against the same database, with the data in the same exact state in both environments.

Working on data in different states can produce misleading results. Imagine that the production workload contains thousands of commands that make changes to a particular order in a database for an e-commerce website: if you tried to replay that workload against a copy of the database taken one week before the order was created, you would not produce the same amount of reads and writes found in the production workload. This means that the two databases have to be synchronized, by performing a point-in-time restore in the test environment up to the moment in which the capture of the production workload has started.

If you have to replay the workload multiple times, it is recommended to take a database snapshot before you start the replay, so that you can revert to that snapshot before repeating the replay.

Replaying a Workload from production

In this case, the workload that you capture in production will act as the baseline and will be compared to the workload captured in test when performing the replay. WorkloadTools lets you choose when to analyze the source workload: you can do that during the workload capture, you can do that while performing the replay or you can do that at a later moment. In the first case, you just need to add a second consumer to the listener and let it write the performance data to a schema in the analysis database.

{

"Controller": {

// This listener connects to the source instance

// using Extended Events

"Listener":

{

"__type": "ExtendedEventsWorkloadListener",

"ConnectionInfo":

{

"ServerName": "SourceInstance"

},

"DatabaseFilter": "YourDatabase"

},

"Consumers":

[

// This consumer analyzes the workload and saves

// the analysis to a database, in the schema “baseline”

{

"__type": "AnalysisConsumer",

"ConnectionInfo":

{

"ServerName": "AnalysisInstance",

"DatabaseName": "SqlWorkload",

"SchemaName": "baseline"

},

"UploadIntervalSeconds": 60

},

// This consumer writes the workload to a file

{

"__type": "WorkloadFileWriterConsumer",

"OutputFile": "C:\\temp\\SqlWorkload.sqlite"

}

]

}

}

If you decide to analyze the workload later, you can start a file listener and feed the events to an analysis consumer. This setup can come in handy when the analysis database is not reachable from the machine where the capture is being performed. This is an example of how to perform the analysis using a workload file as the source:

{

"Controller": {

"Listener":

{

"__type": "FileWorkloadListener",

"Source": "C:\\temp\\SqlWorkload.sqlite",

"SynchronizationMode": "false"

},

"Consumers":

[

{

"__type": "AnalysisConsumer",

"ConnectionInfo":

{

"ServerName": "AnalysisInstance",

"DatabaseName": "SqlWorkload",

"SchemaName": "baseline"

},

"UploadIntervalSeconds": 60

}

]

}

}

Another option is to analyze the source workload while performing the replay. Here is a sample json file for that:

{

"Controller": {

"Listener":

{

"__type": "FileWorkloadListener",

"Source": "C:\\temp\\SqlWorkload.sqlite",

// in this case you want to simulate the original query rate

"SynchronizationMode": "true"

},

"Consumers":

[

{

"__type": "ReplayConsumer",

"ConnectionInfo":

{

"ServerName": "TargetInstance",

"DatabaseName": "YourDatabase"

}

},

{

"__type": "AnalysisConsumer",

"ConnectionInfo":

{

"ServerName": "AnalysisInstance",

"DatabaseName": "SqlWorkload",

"SchemaName": "baseline"

},

"UploadIntervalSeconds": 60

}

]

}

}
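The effect of the synchronization setting can be pictured with a small Python sketch. The samples above only state that "true" simulates the original query rate; the assumption made here, and it is only an assumption, is that "false" lets the events flow as fast as they can be read. The function and its inputs are hypothetical and do not come from WorkloadTools.

```python
def replay_delays(offsets_ms, synchronized):
    """Return how long to wait (in ms) before submitting each event.

    offsets_ms: offset of each event from the start of the capture,
                in ascending order.
    """
    if not synchronized:
        # Assumed behavior: no pacing at all.
        return [0] * len(offsets_ms)

    delays = []
    previous = 0
    for offset in offsets_ms:
        # Wait for the same gap that separated the events at capture time.
        delays.append(offset - previous)
        previous = offset
    return delays


offsets = [0, 150, 400, 1000]
print(replay_delays(offsets, synchronized=True))
print(replay_delays(offsets, synchronized=False))
```

Pacing matters for the replay, where the target should see a realistic query rate, while a pure analysis of a workload file has no reason to wait between events.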

The replay workload has to be captured and analyzed as well, but you don’t need to record the queries to a workload file, because you are only after the performance data and you don’t need to replay the queries captured in this environment. All you need in this case is an instance of SqlWorkload with a listener connected to the test environment and a consumer to perform the analysis.

{

"Controller": {

// This listener points to the target instance

// where the replay is being performed

"Listener":

{

"__type": "ExtendedEventsWorkloadListener",

"ConnectionInfo":

{

"ServerName": "TargetInstance",

"DatabaseName": "DS3"

}

},

"Consumers":

[

{

"__type": "AnalysisConsumer",

"ConnectionInfo":

{

"ServerName": "AnalysisInstance",

"DatabaseName": "SqlWorkload",

"SchemaName": "replay"

},

"UploadIntervalSeconds": 60

}

]

}

}

The analysis data can be saved to the same target database used for the production workload, but it is not a requirement. In case you decide to use the same database, the target schema needs to be different.

Recording multiple benchmarks for the same workload

In this case, the workload captured in production will not be used as the baseline, but the baseline will be obtained by replaying it. This means that you don’t need to analyze the source workload and all you need to do is record it to a file.

Pointing to the target environment, you will need an instance of SqlWorkload with a listener configured to read the workload file and replay the events using a replay consumer.

{

"Controller": {

"Listener":

{

"__type": "FileWorkloadListener",

"Source": "C:\\temp\\SqlWorkload.sqlite",

// in this case you want to simulate the original query rate

"SynchronizationMode": "true"

},

"Consumers":

[

{

"__type": "ReplayConsumer",

"ConnectionInfo":

{

"ServerName": "TargetInstance",

"DatabaseName": "YourDatabase"

}

}

]

}

}

In the same environment, you will have another instance of SqlWorkload with a listener capturing the events being replayed and an analysis consumer to write the performance data to an analysis database.

{

"Controller": {

// This listener points to the target instance

// where the replay is being performed

"Listener":

{

"__type": "ExtendedEventsWorkloadListener",

"ConnectionInfo":

{

"ServerName": "TargetInstance",

"DatabaseName": "DS3"

}

},

"Consumers":

[

{

"__type": "AnalysisConsumer",

"ConnectionInfo":

{

"ServerName": "AnalysisInstance",

"DatabaseName": "SqlWorkload",

"SchemaName": "benchmark01"

},

"UploadIntervalSeconds": 60

}

]

}

}

In order to obtain the second benchmark, you will now need to rewind the database to its initial state by performing a restore (using backups or a snapshot) and then you are ready to perform replay and capture once again. The .json files to use are almost identical to the ones that you used to obtain the first benchmark, except that you will need to specify a different schema to save the workload analysis.

{

"Controller": {

// This listener points to the target instance

// where the replay is being performed

"Listener":

{

"__type": "ExtendedEventsWorkloadListener",

"ConnectionInfo":

{

"ServerName": "TargetInstance",

"DatabaseName": "DS3"

}

},

"Consumers":

[

{

"__type": "AnalysisConsumer",

"ConnectionInfo":

{

"ServerName": "AnalysisInstance",

"DatabaseName": "SqlWorkload",

"SchemaName": "benchmark02"

},

"UploadIntervalSeconds": 60

}

]

}

}
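Since the two configurations differ only in the schema name, you may prefer to derive the second from the first instead of editing it by hand. The following Python sketch shows one way to do that under a few assumptions: the configuration is held as a plain dictionary (the standard json module would not accept the // comments used in the samples above), and `with_schema` is a hypothetical helper, not part of WorkloadTools.

```python
import copy
import json

# Same content as the first benchmark's analysis configuration, without comments.
first_benchmark = {
    "Controller": {
        "Listener": {
            "__type": "ExtendedEventsWorkloadListener",
            "ConnectionInfo": {
                "ServerName": "TargetInstance",
                "DatabaseName": "DS3",
            },
        },
        "Consumers": [
            {
                "__type": "AnalysisConsumer",
                "ConnectionInfo": {
                    "ServerName": "AnalysisInstance",
                    "DatabaseName": "SqlWorkload",
                    "SchemaName": "benchmark01",
                },
                "UploadIntervalSeconds": 60,
            }
        ],
    }
}


def with_schema(config, schema_name):
    """Return a deep copy of `config` with every AnalysisConsumer
    writing to `schema_name`. The original is left untouched."""
    derived = copy.deepcopy(config)
    for consumer in derived["Controller"]["Consumers"]:
        if consumer.get("__type") == "AnalysisConsumer":
            consumer["ConnectionInfo"]["SchemaName"] = schema_name
    return derived


second_benchmark = with_schema(first_benchmark, "benchmark02")
print(json.dumps(second_benchmark, indent=2))
```

The printed output can be saved as the .json file for the second run, which keeps every other setting identical between the two benchmarks.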

Comparing benchmarks using WorkloadViewer

Regardless of the method that you decided to use, at the end of the replays, you will have two distinct sets of tables containing the workload analysis data, sitting in different schemas in the same database or in completely different databases.

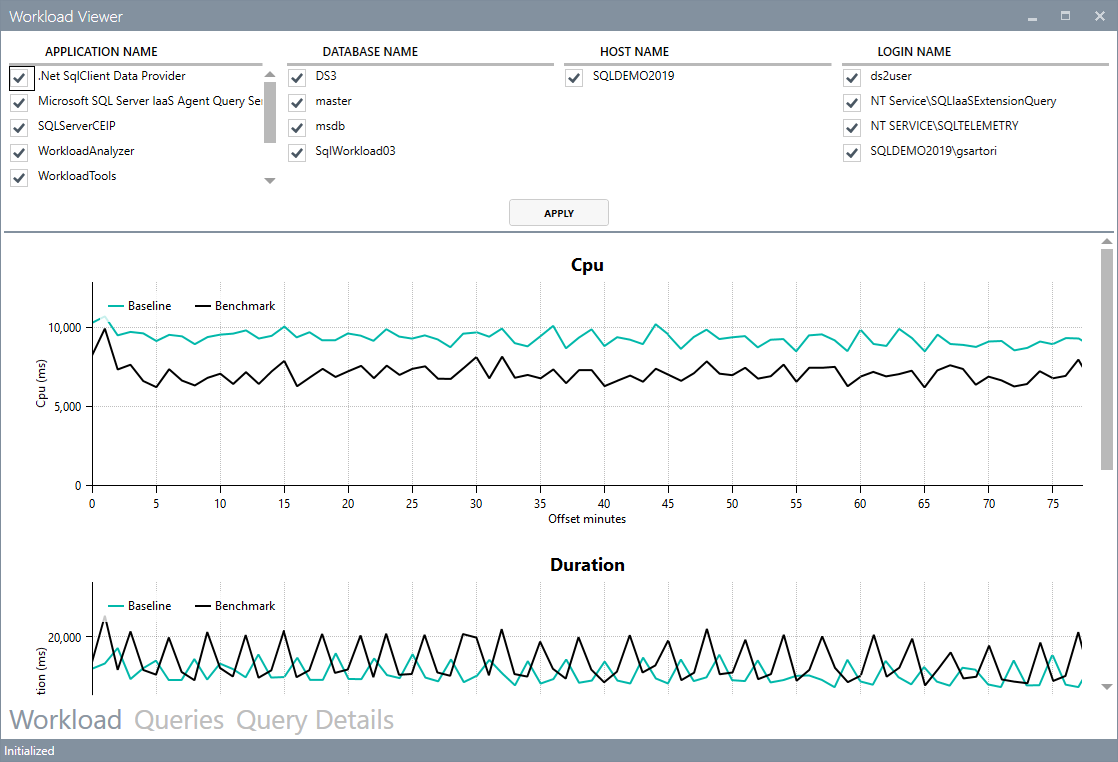

WorkloadViewer will let you visualize performance over time, as we have seen for a single workload analysis, but this time it will be able to show you data from both workloads, so that you can compare them.

The first tab will still contain the charts for total duration, cpu and number of batches per second, with two different series:

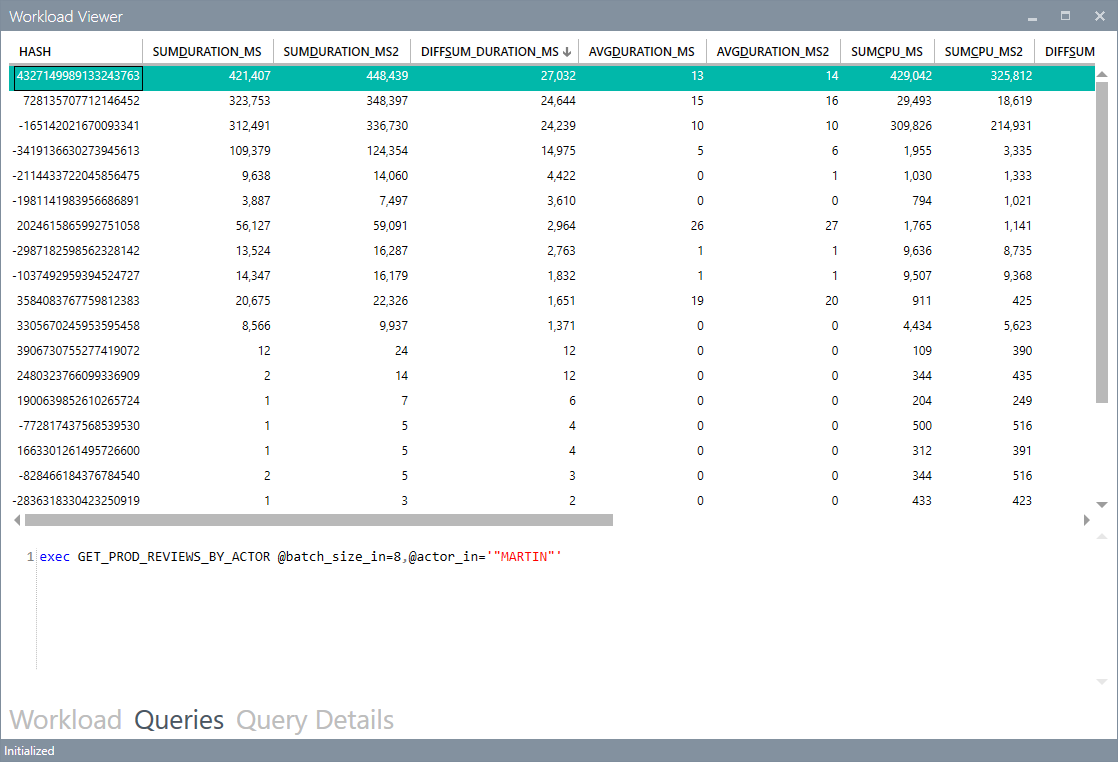

The grid in the second tab will now show performance data by query for both benchmarks, so that you can easily spot regressions sorting by the difference:

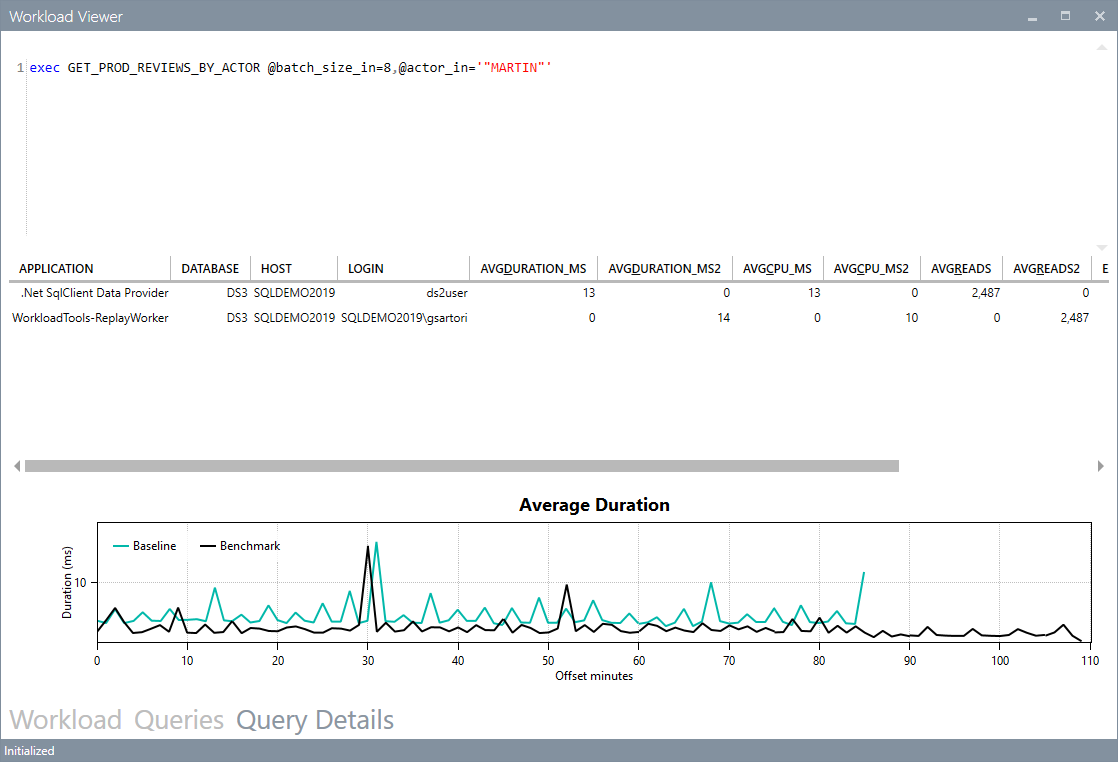
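The comparison behind that grid boils down to a per-query difference between the two benchmarks. Here is a minimal Python sketch of the idea, using made-up numbers and a hypothetical `regressions` function; it does not read the WorkloadTools analysis tables, whose layout is not described here.

```python
def regressions(baseline, benchmark, metric="duration"):
    """Return (query, baseline value, benchmark value, difference) tuples
    for queries present in both runs, largest difference first."""
    rows = []
    for query, stats in baseline.items():
        if query in benchmark:
            delta = benchmark[query][metric] - stats[metric]
            rows.append((query, stats[metric], benchmark[query][metric], delta))
    return sorted(rows, key=lambda row: row[3], reverse=True)


# Hypothetical per-query totals for the two benchmarks.
baseline_stats = {
    "SELECT orders": {"duration": 120, "cpu": 80},
    "UPDATE stock": {"duration": 300, "cpu": 150},
    "SELECT customers": {"duration": 50, "cpu": 20},
}
benchmark_stats = {
    "SELECT orders": {"duration": 450, "cpu": 300},
    "UPDATE stock": {"duration": 280, "cpu": 140},
    "SELECT customers": {"duration": 55, "cpu": 25},
}

for row in regressions(baseline_stats, benchmark_stats):
    print(row)
```

Sorting by the difference puts the worst regression at the top and the queries that improved at the bottom.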

The third tab will show you the details for a single query, with the detail broken down by application, hostname, username and databasename. It will also contain a chart to show you the behavior of the query over time.

Conclusions

Even when replaying a workload, WorkloadTools keeps the promise of low complexity and allows you to perform all the activities involved in your benchmarking scenarios.

In the next post I will show you how to leverage the most interesting feature of WorkloadTools: the real-time replay. Stay tuned!

Benchmarking with WorkloadTools

If you ever tried to capture a benchmark on your SQL Server, you probably know that it is a complex operation. Not an impossible task, but definitely something that needs to be planned, timed and studied very thoroughly.

The main idea is that you capture a workload from production, you extract some performance information, then you replay the same workload to one or more environments that you want to put to test, while capturing the same performance information. At the end of the process, you can compare performance under different conditions, identify regressions, avoid unwanted situations and rate your tuning efforts.

A big part of the complexity, let’s face it, comes from the fact that the tools that we have had in our toolbelt so far are complex and suffer from a number of limitations that make this exercise very similar to a hurdle race.

If you want to replay a workload from production to test, you need to be able to capture the workload first. Even before you start, you’re already confronted with a myriad of questions:

- What do you use for this? A server-side trace? Extended events? Profiler maybe?

- Which events do you capture? Which fields?

- How long do you need to run the capture? How much is enough? One hour? One day? One week? One month?

- Can you apply some filters?

- Will you have enough disk space to store the captured data?

Throughout the years, you’ve had multiple tools for capturing workloads, each with its own strengths and limitations:

- Profiler

  - GOOD: extremely easy to use

  - BAD: non-negligible impact on the server

- Extended Events

  - GOOD: lightweight

  - BAD: not compatible with older versions of SQL Server

- SQL Trace

  - GOOD: less impactful than Profiler

  - BAD: deprecated

However, capturing the workload is not enough: you need to be able to replay it and analyze/compare the performance data.

But fear not! You have some tools that can help you here:

- RML Utilities

- SQL Nexus

- Distributed Replay

- Database Experimentation Assistant (DEA)

The bad news is that (again) each of these tools has its limitations and hurdles, even if the tin says that any monkey could do it. There is nothing like running ReadTrace.exe or Dreplay.exe against a huge set of trace files, only to have it fail after two hours, without a meaningful error message (true story). Moreover, of all these tools, only Distributed Replay (and DEA, which is built on top of it) supports Azure SQL Database and Azure SQL Managed Instance: if you’re working with Azure, be prepared to forget everything you know about traces and RML Utilities.

Introducing WorkloadTools

Throughout my career, I had to go through the pain of benchmarking often enough to get fed up with all the existing tools and decide to code my own. The result of this endeavor is WorkloadTools: a collection of tools to collect, analyze and replay SQL Server workloads, on premises and in the cloud.

At the moment, the project includes 3 tools:

- SqlWorkload – a command line tool to capture, replay and analyze a workload

- ConvertWorkload – a command line tool to convert existing workloads (traces and extended events) to the format used by SqlWorkload

- WorkloadViewer – a GUI tool to visualize and analyze workload data

SqlWorkload is different from the traditional tools, because it lets you choose the technology for the capture: SqlTrace, Extended Events or a pre-recorded workload file. SqlWorkload also lets you choose the platform that you prefer: it works with older versions of SQL Server (tested from 2008 onwards, but nothing prevents it from running on SQL Server 2000) and newer versions, like 2017 or 2019. But the groundbreaking feature of SqlWorkload is its ability to work with Azure SQL Database Managed Instances and Azure SQL Database, by capturing Extended Events on Azure blob storage.

The capture is performed by a “Listener”, that reads the workload events from the source and forwards them immediately to a collection of “Consumers”, each specialized for performing a particular task on the events that it receives. You have a consumer for replaying the workload, a consumer for saving the workload to a file and a consumer for analyzing the workload to a database.
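The listener/consumer relationship can be pictured with a few lines of Python. This is a conceptual sketch only: the class names are hypothetical, the consumers merely record what they receive, and nothing here reflects how SqlWorkload is actually implemented.

```python
class RecordingConsumer:
    """Stand-in consumer that simply remembers the events it receives."""

    def __init__(self, name):
        self.name = name
        self.received = []

    def consume(self, event):
        self.received.append(event)


class Listener:
    """Reads events from a source and forwards each one to every consumer."""

    def __init__(self, source, consumers):
        self.source = source
        self.consumers = consumers

    def run(self):
        for event in self.source:
            # Forward immediately: nothing is saved to disk first.
            for consumer in self.consumers:
                consumer.consume(event)


replay = RecordingConsumer("replay")
analysis = RecordingConsumer("analysis")
file_writer = RecordingConsumer("file writer")

captured = iter(["SELECT 1", "EXEC dbo.GetOrders", "UPDATE dbo.Stock SET Qty = 0"])
Listener(captured, [replay, analysis, file_writer]).run()

for consumer in (replay, analysis, file_writer):
    print(consumer.name, len(consumer.received))
```

Because every consumer sees every event as it arrives, adding or removing a task (replay, analysis, saving to a file) is just a matter of changing the list of consumers.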

This flexible architecture allows you to do things differently from the existing tools. The traditional approach to benchmarking has always been:

- capture to one or more files

- analyze the files

- replay and capture

- analyze the files

- compare

SqlWorkload does not force you to save your workload to disk completely before you can start working with it, but it lets you forward the events to any type of consumer as soon as they are captured, thus enabling new types of workflows for your benchmarking activities. With SqlWorkload you are free to analyze the events while capturing, but you can also replay to a target database in real-time, while a second instance of SqlWorkload analyzes the events on the target.

If you’re used to a more traditional approach to benchmarking, you can certainly do things the usual way: you can capture a workload to a file, then use that file as a source for both the workload analysis and the replay. While replaying, you can capture the workload to a second set of files, that you can analyze to extract performance data. Another possibility is to analyze the workload directly while you capture it, writing to a workload file that you can use only for the replay.

As you can see, you have many possibilities and you are free to choose the solution that makes the most sense in your scenario. You may think that all this flexibility comes at the price of simplicity, but you’d be surprised by how easy it is to get started with WorkloadTools. SqlWorkload was designed to be as simple as possible, without having to learn and remember countless command line switches. Instead, it can be controlled by providing parameters in .JSON files, that can be saved, kept around and used as templates for the next benchmark.

For instance, the .JSON configuration file for “SqlWorkload A” in the picture above would look like this:

{

"Controller": {

"Listener":

{

"__type": "ExtendedEventsWorkloadListener",

"ConnectionInfo":

{

"ServerName": "SourceServer",

"DatabaseName": "SourceDatabase",

"UserName": "sa",

"Password": "P4$$w0rd!"

},

"DatabaseFilter": "SourceDatabase"

},

"Consumers":

[

{

"__type": "ReplayConsumer",

"ConnectionInfo":

{

"ServerName": "TargetServer",

"DatabaseName": "TargetDatabase",

"UserName": "sa",

"Password": "Pa$$w0rd!"

}

},

{

"__type": "AnalysisConsumer",

"ConnectionInfo":

{

"ServerName": "AnalysisServer",

"DatabaseName": "AnalysisDatabase",

"SchemaName": "baseline",

"UserName": "sa",

"Password": "P4$$w0rd!"

},

"UploadIntervalSeconds": 60

}

]

}

}

As you can see, SqlWorkload expects very basic information and does not need to set up complex traces or XE sessions: all you have to do is configure what type of Listener to use and its parameters, then you need to specify which Consumers to use and their parameters (mainly connection details and credentials) and SqlWorkload will take care of the rest.

If you need to control the process in more detail, you can certainly do so: the full list of parameters that you can specify in .JSON files is available in the documentation of SqlWorkload at GitHub.

Once the capture is over and you completely persisted the workload analysis to a database, you can use WorkloadViewer to visualize it. WorkloadViewer will show you charts for Cpu, Duration and Batches/sec, comparing how the two benchmarks performed. You can also use the filters at the top to focus the analysis on a subset of the data or you can zoom and pan on the horizontal axis to select a portion of the workload to analyze.

You can also use the “Queries” tab to see an overview of the individual batches captured in the workload. For each of those batches, you’ll be able to see the text of the queries and you will see stats for cpu, duration, reads, writes and number of executions. Sorting by any of these columns will let you spot immediately the regressions between the baseline and the benchmark and you will know exactly where to start tuning.

If you double click one of the queries, you will go to the Query Details tab, which will show you additional data about the selected query, along with its performance over time:

If WorkloadViewer is not enough for you, the project also includes a PowerBI dashboard that you can use to analyze the data from every angle. Does it look exciting enough? Wait, there’s more…

If you already have a pre-captured workload in any format (SqlTrace or Extended Events) you can use the command line tool ConvertWorkload to create a new workload file in the intermediate format used and understood by SqlWorkload (spoiler: it’s a SQLite database), in order to use it as the source for a FileWorkloadListener. This means that you can feed your existing trace data to the WorkloadTools analysis database, or replay it to a test database, even if the workload was not captured with WorkloadTools in the first place.

We have barely scratched the surface of what WorkloadTools can do: in the next weeks I will post detailed information on how to perform specific tasks with WorkloadTools, like capturing to a workload file or performing a real-time replay. In the meantime, you can read the documentation or you can join me at SqlBits, where I will introduce WorkloadTools during my session.

Stay tuned!

Replaying Workloads with Distributed Replay

A couple of weeks ago I posted a method to convert trace files from the SQL Server 2012 format to the SQL Server 2008 format.

The trick works quite well and the trace file can be opened with Profiler or with ReadTrace from RML Utilities. What doesn’t seem to work just as well is the trace replay with Ostress (another great tool bundled in the RML Utilities).

For some reason, Ostress refuses to replay the whole trace file and starts throwing lots of errors.

Some errors are due to the workload I was replaying (it contains CREATE TABLE statements and that can obviously work just the first time it is issued), but some others seem to be due to parsing errors, probably because of differences in the trace format between version 11 and 10.

11/20/12 12:30:39.008 [0x00001040] File C:\RML\SQL00063.rml: Parser Error: [Error: 60500][State: 1][Abs Char: 1068][Seq: 0] Syntax error [parse error, expecting `tok_RML_END_RPC'] encountered near

0x0000042C: 6C000D00 0A005700 48004500 52004500 l.....W.H.E.R.E.

0x0000043C: 20005500 6E006900 74005000 72006900 .U.n.i.t.P.r.i.

0x0000044C: 63006500 20002600 6C007400 3B002000 c.e. .&.l.t.;. .

0x0000045C: 24003500 2E003000 30000D00 0A004F00 $.5...0.0.....O.

0x0000046C: 52004400 45005200 20004200 59002000 R.D.E.R. .B.Y. .

0x0000047C: 50007200 6F006400 75006300 74004900 P.r.o.d.u.c.t.I.

0x0000048C: 44002C00 20004C00 69006E00 65005400 D.,. .L.i.n.e.T.

0x0000049C: 6F007400 61006C00 3B000D00 0A003C00 o.t.a.l.;.....<.

0x000004AC: 2F004300 4D004400 3E000D00 0A003C00 /.C.M.D.>.....<.

0x000004BC: 2F004C00 41004E00 47003E00 0D000A00 /.L.A.N.G.>.....

0x000004CC:

11/20/12 12:30:39.010 [0x00001040] File C:\RML\SQL00063.rml: Parser Error: [Error: 110010][State: 100][Abs Char: 1068][Seq: 0] SYNTAX ERROR: Parser is unable to safely recover. Correct the errors and try again.

The error suggests that the two formats are indeed more different than I supposed, thus making the replay with Ostress a bit unreliable.

Are there other options?

Sure there are! Profiler is another tool that allows replaying the workload, even if some limitations apply. For instance, Profiler cannot be scripted, which is a huge limitation if you are using Ostress in a benchmarking script and want to replace it with something else.

That “something else” could actually be the Distributed Replay feature introduced in SQL Server 2012.

Basically, Distributed Replay does the same things that Ostress does and even more, with the nice addition of the possibility to start the replay on multiple machines, thus simulating a workload that more closely resembles the one found in production.

An introduction to Distributed Replay can be found on Jonathan Kehayias’ blog and I will refrain from going into deep details here: those posts are outstanding and there’s very little I could add to that.

Installing the Distributed Replay feature

The first step for the installation is adding a new user for the distributed replay services. You could actually use separate accounts for the Controller and Client services, but for a quick demo a single user is enough.

The Distributed Replay Controller and Client features must be selected from the Feature Selection dialog of SQL Server setup:

In the next steps of the setup you will also be asked for the service accounts to use for the services, and on the Replay Client page you will have to enter the controller name and the working directories.

Once the setup is over, you will find two new services in the Server Manager:

After starting the services (first the Controller, then the Client), you can go to the log directories and check in the log files if everything is working.

The two files to check are in the following folders:

- C:\Program Files (x86)\Microsoft SQL Server\110\Tools\DReplayController\Log

- C:\Program Files (x86)\Microsoft SQL Server\110\Tools\DReplayClient\Log

Just to prove one more time that “if something can go wrong, it will”, the client log will probably contain an obnoxious error message.

DCOM gotchas

Setting up the distributed replay services can get tricky because of some permissions needed to let the client connect to the controller. Unsurprisingly, the client/controller communication is provided by DCOM, which must be configured correctly.

Without granting the appropriate permissions, in the distributed replay client log file you may find the following message:

2012-11-03 00:43:04:062 CRITICAL [Client Service] [0xC8100005 (6)] Failed to connect controller with error code 0x80070005.

In practical terms, the service account that executes the distributed replay controller service must be granted permissions to use the DCOM class locally and through the network:

- Run dcomcnfg.exe

- Navigate the tree to Console Root, Component Services, Computers, My Computer, DCOM Config, DReplayController

- Right click DReplayController and choose “properties” from the context menu.

- Click the Security tab

- Click the “Launch and Activation Permissions” edit button and grant “Local Activation” and “Remote Activation” permissions to the service account

- Click the “Access Permissions” edit button and grant “Local Access” and “Remote Access” permissions to the service account

- Add the service user account to the “Distributed COM Users” group

- Restart the distributed replay controller and client services

After restarting the services, you will find that the message in the log file has changed:

2012-11-20 14:01:10:783 OPERATIONAL [Client Service] Registered with controller "WIN2012_SQL2012".
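
If you prefer not to eyeball the client log after every restart, the check can be scripted. The following is a minimal Python sketch, assuming the log only needs to be matched against the two message formats quoted above; the function name `client_status` and the regular expressions are my own illustration and are not part of Distributed Replay.

```python
import re

# Patterns modelled on the two log lines quoted above.
# Any other message format is simply ignored by this sketch.
FAILED = re.compile(
    r"CRITICAL \[Client Service\].*"
    r"Failed to connect controller with error code (0x[0-9A-Fa-f]+)"
)
REGISTERED = re.compile(
    r'OPERATIONAL \[Client Service\] Registered with controller "([^"]+)"'
)


def client_status(log_lines):
    """Return the state described by the last matching log line.

    Possible results: ('registered', controller_name),
    ('failed', error_code) or ('unknown', None).
    """
    status = ("unknown", None)
    for line in log_lines:
        match = REGISTERED.search(line)
        if match:
            status = ("registered", match.group(1))
            continue
        match = FAILED.search(line)
        if match:
            # 0x80070005 is the Windows "access denied" HRESULT,
            # which points at the DCOM permissions described above.
            status = ("failed", match.group(1))
    return status
```

Feeding it the lines of the client log file tells you whether the last recorded event was a successful registration or a connection failure.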

Using the Replay feature

Once the services are successfully started, we can start using the Distributed Replay feature.

The trace file has to meet the same requirements for replay found in Profiler, thus making the “Replay” trace template suitable for the job.

But there’s one more step needed before we can replay the trace file, which cannot be replayed directly. In fact, Distributed Replay needs to work on a trace stub, obtained by preprocessing the original trace file.

The syntax to obtain the stub is the following:

"C:\Program Files (x86)\Microsoft SQL Server\110\Tools\Binn\dreplay.exe" preprocess -i "C:\SomePath\replay_trace.trc" -d "C:\SomePath\preprocessDir"

Now that the trace stub is ready, we can start the replay admin tool from the command line, using the following syntax:

"C:\Program Files (x86)\Microsoft SQL Server\110\Tools\Binn\dreplay.exe" replay -s "targetServerName" -d "C:\SomePath\preprocessDir" -w "list,of,allowed,client,names"

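Since the whole point of replacing Ostress is being able to script the replay, the two commands above can be chained from a benchmarking script. Here is a minimal Python sketch, assuming the same installation path and only the options shown above (`-i`, `-d`, `-s`, `-w`); the function names are hypothetical and the paths are placeholders to adjust.

```python
import subprocess

# Path taken from the commands above; adjust it for your installation.
DREPLAY = r"C:\Program Files (x86)\Microsoft SQL Server\110\Tools\Binn\dreplay.exe"


def preprocess_args(trace_file, work_dir):
    """Argument list for the preprocess step (creates the trace stub)."""
    return [DREPLAY, "preprocess", "-i", trace_file, "-d", work_dir]


def replay_args(target_server, work_dir, clients):
    """Argument list for the replay step; clients is a list of client names."""
    return [DREPLAY, "replay", "-s", target_server,
            "-d", work_dir, "-w", ",".join(clients)]


def run_replay(trace_file, work_dir, target_server, clients):
    """Run both steps in order, stopping if either one fails."""
    # check=True raises CalledProcessError on a non-zero exit code,
    # so the replay never starts on a missing or broken stub.
    subprocess.run(preprocess_args(trace_file, work_dir), check=True)
    subprocess.run(replay_args(target_server, work_dir, clients), check=True)
```

Passing the arguments as a list avoids having to quote the paths that contain spaces.
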
A final word

A comparison of the features found in the different replay tools can be found in the following table:

| Feature | Profiler | Ostress | Distributed Replay |
| --- | --- | --- | --- |
| Multithreading | YES | YES | YES |
| Debugging | YES | NO | NO |
| Synchronization mode | NO | YES | YES |
| Stress mode | YES | YES | YES |
| Distributed mode | NO | NO | YES |
| Scriptable | NO | YES | YES |
| Input format | Trace | Trace/RML/SQL | Trace |

The Distributed Replay Controller can act as a replacement for Ostress, except for the ability to replay SQL and RML files.

Will we be using RML Utilities again in the future? Maybe: it depends on what Microsoft decides to do with this tool. It’s not unlikely that the Distributed Replay feature will replace the RML Utilities entirely. The tracing feature itself has an uncertain future ahead, with the deprecation in SQL Server 2012. Probably this new feature will disappear in the next versions of SQL Server, or it will be ported to the Extended Events infrastructure, who knows?

One thing is sure: today we have three tools that support replaying trace files and seeing this possibility disappear in the future would be very disappointing. I’m sure SQL Server will never disappoint us. 🙂

Replay a T-SQL batch against all databases

It’s been quite a while since I last posted on this blog and I apologize to my readers, both of them :-).

Today I would like to share with you a handy script I coded recently during a SQL Server health check. One of the tools I find immensely valuable for conducting a SQL Server assessment is Glenn Berry’s SQL Server Diagnostic Information Queries. The script contains several queries that can help you collect and analyze a whole lot of information about a SQL Server instance and I use it quite a lot.

The script comes with a blank results spreadsheet, that can be used to save the information gathered by the individual queries. Basically, the spreadsheet is organized in tabs, one for each query and has no preformatted column names, so that you can run the query, select the whole results grid, copy with headers and paste everything to the appropriate tab.

When working with multiple instances, SSMS can help automate this task with multiserver queries. Depending on your SSMS settings, the results of a multiserver query can be merged into a single grid, with an additional column holding the server name.

This feature is very handy, because it lets you run a statement against multiple servers without changing the statement itself.

This works very well for the queries in the first part of Glenn Berry’s script, which is dedicated to instance-level checks. The second part of the script is database-specific and you have to repeat the run+copy+paste process for each database in your instance.

It would be great if there was a feature in SSMS that allowed you to obtain the same results as the multiserver queries, scaled down to the database level. Unfortunately, SSMS has no such feature and the only possible solution is to code it yourself… or borrow my script!

Before rushing to the code, let’s briefly describe the idea behind it and the challenges involved.

It would be quite easy to take a single statement and use it with sp_MsForEachDB, but this solution has several shortcomings:

- The results would display as individual grids

- There would be no easy way to determine which results grid belongs to which database

- The statement would have to be surrounded with quotes and existing quotes would have to be doubled, with an increased and unwanted complexity

The ideal tool for this task should simply take a statement and run it against all [user] databases without modifying the statement at all, merge the results in a single result set and add an additional column to hold the database name. Apparently, sp_MSForEachDB, besides being undocumented and potentially nasty, is not the right tool for the job.
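
To get a feel for the quoting problem, here is a small Python sketch that builds the string you would have to hand to sp_MSForEachDB. The helper names are made up for illustration; the only facts relied upon are that T-SQL escapes a single quote inside a literal by doubling it and that sp_MSForEachDB replaces `?` with each database name.

```python
def quote_tsql_literal(statement, levels=1):
    """Wrap a statement in single quotes, once per nesting level.

    At every level the quotes already present are doubled, so the
    number of quote characters grows quickly with the nesting depth.
    """
    for _ in range(levels):
        statement = "'" + statement.replace("'", "''") + "'"
    return statement


def foreachdb_call(statement):
    """Build the sp_MSForEachDB call for a statement (illustration only)."""
    # '?' is the placeholder that sp_MSForEachDB replaces with the database name.
    return "EXEC sp_MSForEachDB " + quote_tsql_literal("USE [?]; " + statement)


if __name__ == "__main__":
    query = "SELECT DATABASEPROPERTYEX(DB_NAME(),'Recovery') AS RecoveryModel"
    print(foreachdb_call(query))
    # Two nesting levels, as needed when the statement is wrapped twice
    print(quote_tsql_literal(query, levels=2))
```

Even with a one-line query the doubled quotes are hard to read, and every extra level of nesting makes it worse, which is exactly what a tool that replays the statement unmodified avoids.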

That said, the only option left is to capture the statement from its query window, combining a trace, a loopback linked server and various other tricks.

Here’s the code:

-- =============================================

-- Author: Gianluca Sartori - @spaghettidba

-- Create date: 2012-06-26

-- Description: Records statements to replay

-- against all databases.

-- =============================================

CREATE PROCEDURE replay_statements_on_each_db

@action varchar(10) = 'RECORD',

@start_statement_id int = NULL,

@end_statement_id int = NULL

AS

BEGIN

SET NOCOUNT ON;

DECLARE @TraceFile nvarchar(256);

DECLARE @TraceFileNoExt nvarchar(256);

DECLARE @LastPathSeparator int;

DECLARE @TracePath nvarchar(256);

DECLARE @TraceID int;

DECLARE @fs bigint = 5;

DECLARE @r int;

DECLARE @spiid int = @@SPID;

DECLARE @srv nvarchar(4000);

DECLARE @ErrorMessage nvarchar(4000);

DECLARE @ErrorSeverity int;

DECLARE @ErrorState int;

DECLARE @sql nvarchar(max);

DECLARE @statement nvarchar(max);

DECLARE @column_list nvarchar(max);

IF @action NOT IN ('RECORD','STOPRECORD','SHOWQUERY','REPLAY')

RAISERROR('A valid @action (RECORD,STOPRECORD,SHOWQUERY,REPLAY) must be specified.',16,1)

-- *********************************************** --

-- * RECORD * --

-- *********************************************** --

IF @action = 'RECORD'

BEGIN

BEGIN TRY

-- Identify the path of the default trace

SELECT @TraceFile = path

FROM master.sys.traces

WHERE id = 1

-- Split the directory / filename parts of the path

SELECT @LastPathSeparator = MAX(number)

FROM master.dbo.spt_values

WHERE type = 'P'

AND number BETWEEN 1 AND LEN(@tracefile)

AND CHARINDEX('\', @TraceFile, number) = number

--' fix WordPress's sql parser quirks'

SELECT @TraceFile =

SUBSTRING(

@TraceFile

,1

,@LastPathSeparator

)

+ 'REPLAY_'

+ CONVERT(char(8),GETDATE(),112)

+ REPLACE(CONVERT(varchar(8),GETDATE(),108),':','')

+ '.trc'

SET @TraceFileNoExt = REPLACE(@TraceFile,N'.trc',N'')

-- create trace

EXEC sp_trace_create @TraceID OUTPUT, 0, @TraceFileNoExt, @fs, NULL;

--add filters and events

EXEC sp_trace_setevent @TraceID, 41, 1, 1;

EXEC sp_trace_setevent @TraceID, 41, 12, 1;

EXEC sp_trace_setevent @TraceID, 41, 13, 1;

EXEC sp_trace_setfilter @TraceID, 1, 0, 7, N'%fn_trace_gettable%'

EXEC sp_trace_setfilter @TraceID, 1, 0, 7, N'%replay_statements_on_each_db%'

EXEC sp_trace_setfilter @TraceID, 12, 0, 0, @spiid

--start the trace

EXEC sp_trace_setstatus @TraceID, 1

--create a global temporary table to store the statements

IF OBJECT_ID('tempdb..##replay_info') IS NOT NULL

DROP TABLE ##replay_info;

CREATE TABLE ##replay_info (

trace_id int,

statement_id int,

statement_text nvarchar(max)

);

--save the trace id in the global temp table

INSERT INTO ##replay_info (trace_id) VALUES(@TraceID);

END TRY

BEGIN CATCH

--cleanup the trace

IF EXISTS( SELECT 1 FROM sys.traces WHERE id = @TraceId AND status = 1 ) EXEC sp_trace_setstatus @TraceID, 0;

IF EXISTS( SELECT 1 FROM sys.traces WHERE id = @TraceId AND status = 0 ) EXEC sp_trace_setstatus @TraceID, 2;

IF OBJECT_ID('tempdb..##replay_info') IS NOT NULL

DROP TABLE ##replay_info;

SELECT @ErrorMessage = ERROR_MESSAGE(),

@ErrorSeverity = ERROR_SEVERITY(),

@ErrorState = ERROR_STATE();

RAISERROR(@ErrorMessage, @ErrorSeverity, @ErrorState);

END CATCH

END

-- *********************************************** --

-- * STOP RECORDING * --

-- *********************************************** --

IF @action = 'STOPRECORD'

BEGIN

BEGIN TRY

-- gather the trace id

SELECT @TraceID = trace_id

FROM ##replay_info;

IF @TraceId IS NULL

RAISERROR('No data has been recorded!',16,1)

DELETE FROM ##replay_info;

-- identify the trace file

SELECT TOP(1) @TraceFile = path

FROM sys.traces

WHERE path like '%REPLAY[_]______________.trc'

ORDER BY id DESC

-- populate the global temporary table with

-- the statements recorded in the trace file

INSERT INTO ##replay_info

SELECT @TraceID,

ROW_NUMBER() OVER(ORDER BY (SELECT NULL)),

TextData

FROM fn_trace_gettable(@traceFile, DEFAULT)

WHERE TextData IS NOT NULL;

--stop and delete the trace

IF EXISTS( SELECT 1 FROM sys.traces WHERE id = @TraceId AND status = 1 ) EXEC sp_trace_setstatus @TraceID, 0;

IF EXISTS( SELECT 1 FROM sys.traces WHERE id = @TraceId AND status = 0 ) EXEC sp_trace_setstatus @TraceID, 2;

END TRY

BEGIN CATCH

--stop and delete the trace

IF EXISTS( SELECT 1 FROM sys.traces WHERE id = @TraceId AND status = 1 ) EXEC sp_trace_setstatus @TraceID, 0;

IF EXISTS( SELECT 1 FROM sys.traces WHERE id = @TraceId AND status = 0 ) EXEC sp_trace_setstatus @TraceID, 2;

SELECT @ErrorMessage = ERROR_MESSAGE(),

@ErrorSeverity = ERROR_SEVERITY(),

@ErrorState = ERROR_STATE();

RAISERROR(@ErrorMessage, @ErrorSeverity, @ErrorState);

END CATCH

END

-- *********************************************** --

-- * SHOW COLLECTED QUERIES * --

-- *********************************************** --

IF @action = 'SHOWQUERY'

BEGIN

BEGIN TRY

IF OBJECT_ID('tempdb..##replay_info') IS NULL

RAISERROR('No data has been recorded yet',16,1);

SET @sql = 'SELECT statement_id, statement_text FROM ##replay_info ';

IF @start_statement_id IS NOT NULL AND @end_statement_id IS NULL

SET @sql = @sql + ' WHERE statement_id = @start_statement_id ';

IF @start_statement_id IS NOT NULL AND @end_statement_id IS NOT NULL

SET @sql = @sql + ' WHERE statement_id

BETWEEN @start_statement_id AND @end_statement_id';

EXEC sp_executesql

@sql

,N'@start_statement_id int, @end_statement_id int'

,@start_statement_id

,@end_statement_id;

END TRY

BEGIN CATCH

SELECT @ErrorMessage = ERROR_MESSAGE(),

@ErrorSeverity = ERROR_SEVERITY(),

@ErrorState = ERROR_STATE();

RAISERROR(@ErrorMessage, @ErrorSeverity, @ErrorState);

END CATCH

END

-- *********************************************** --

-- * REPLAY * --

-- *********************************************** --

IF @action = 'REPLAY'

BEGIN

BEGIN TRY

--load the selected statement(s)

SET @statement = '

SET @sql = ''''

SELECT @sql += statement_text + '' ''

FROM ##replay_info

';

IF @start_statement_id IS NOT NULL AND @end_statement_id IS NULL

SET @statement =

@statement

+ ' WHERE statement_id = @start_statement_id ';

IF @start_statement_id IS NOT NULL AND @end_statement_id IS NOT NULL

SET @statement =

@statement

+ ' WHERE statement_id

BETWEEN @start_statement_id AND @end_statement_id';

EXEC sp_executesql

@statement

,N'@start_statement_id int, @end_statement_id int, @sql nvarchar(max) OUTPUT'

,@start_statement_id

,@end_statement_id

,@sql OUTPUT;

IF NULLIF(LTRIM(@sql),'') IS NULL

RAISERROR('Unable to locate the statement(s) specified.',16,1)

SET @srv = @@SERVERNAME; -- gather this server name

IF EXISTS (SELECT * FROM sys.servers WHERE name = 'TMPLOOPBACK')

EXEC sp_dropserver 'TMPLOOPBACK';

-- Create a loopback linked server

EXEC master.dbo.sp_addlinkedserver

@server = N'TMPLOOPBACK',

@srvproduct = N'SQLServ', -- it’s not a typo: it can’t be “SQLServer”

@provider = N'SQLNCLI', -- change to SQLOLEDB for SQLServer 2000

@datasrc = @srv;

-- Set the authentication to "current security context"

EXEC master.dbo.sp_addlinkedsrvlogin

@rmtsrvname = N'TMPLOOPBACK',

@useself = N'True',

@locallogin = NULL,

@rmtuser = NULL,

@rmtpassword = NULL;

-- Use a permanent table in Tempdb to store the output

IF OBJECT_ID('tempdb..___outputTable') IS NOT NULL

DROP TABLE tempdb..___outputTable;

-- Execute the statement in Tempdb to discover the column definition

SET @statement = '

SELECT TOP(0) *

INTO tempdb..___outputTable

FROM OPENQUERY(TMPLOOPBACK,''

SET FMTONLY OFF; EXEC tempdb.sys.sp_executesql N''''' + REPLACE(@sql,'''','''''''''') + '''''

'')

';

EXEC(@statement);

SET @statement = @sql;

-- Build the column list of the output table

SET @column_list = STUFF((

SELECT ',' + QUOTENAME(C.name)

FROM tempdb.sys.columns AS C

INNER JOIN tempdb.sys.tables AS T

ON C.object_id = T.object_id

WHERE T.name = '___outputTable'

FOR XML PATH('')

),1,1,SPACE(0));

-- Add a "Database Name" column

ALTER TABLE tempdb..___outputTable ADD Database__Name sysname;

-- Build a sql statement to execute

-- the recorded statement against all databases

SET @sql =

'N''INSERT tempdb..___outputTable(' + @column_list + ') EXEC(@statement); UPDATE tempdb..___outputTable SET Database__Name = DB_NAME() WHERE Database__Name IS NULL;''';

-- Build a statement to execute on each database context

;WITH dbs AS (

SELECT *,

system_db = CASE WHEN name IN ('master','model','msdb','tempdb') THEN 1 ELSE 0 END

FROM sys.databases

WHERE DATABASEPROPERTY(name, 'IsSingleUser') = 0

AND HAS_DBACCESS(name) = 1

AND state_desc = 'ONLINE'

)

SELECT @sql = (

SELECT

'EXEC ' + QUOTENAME(name) + '.sys.sp_executesql ' +

@sql + ',' +

'N''@statement nvarchar(max)'',' +

'@statement;' + char(10) AS [text()]

FROM dbs

ORDER BY name

FOR XML PATH('')

);

-- Execute multi-db sql and pass in the actual statement

EXEC sp_executeSQL @sql, N'@statement nvarchar(max)', @statement

--

SET @sql = '

SELECT Database__Name AS [Database Name], ' + @column_list + '

FROM tempdb..___outputTable

ORDER BY 1;

'

EXEC sp_executesql @sql;

EXEC tempdb.sys.sp_executesql N'DROP TABLE ___outputTable';

END TRY

BEGIN CATCH

SELECT @ErrorMessage = ERROR_MESSAGE(),

@ErrorSeverity = ERROR_SEVERITY(),

@ErrorState = ERROR_STATE();

RAISERROR(@ErrorMessage, @ErrorSeverity, @ErrorState);

END CATCH

END

END

As you can see, the code creates a stored procedure that accepts a parameter named @action, which is used to determine what the procedure should do. Specialized sections of the procedure handle every possible value for the parameter, with the following logic:

First of all you start recording, then you execute the statements to repeat on each database, then you stop recording. From that moment on, you can enumerate the statements captured and execute them, passing a specific statement id or a range of ids.

The typical use of the procedure could look like this:

-- start recording

EXECUTE replay_statements_on_each_db

@action = 'RECORD'

-- run the statements you want to replay

SELECT DATABASEPROPERTYEX(DB_NAME(),'Recovery') AS RecoveryModel

-- stop recording

EXECUTE replay_statements_on_each_db

@action = 'STOPRECORD'

-- display captured statements

EXECUTE replay_statements_on_each_db

@action = 'SHOWQUERY'

-- execute the first statement

EXECUTE replay_statements_on_each_db

@action = 'REPLAY',

@start_statement_id = 1,

@end_statement_id = 1

You can see the results of the script execution here:

Obviously this approach is total overkill for just selecting the database recovery model, but it can become very handy as the statement’s complexity grows.

This seems a perfect fit for Glenn Berry’s diagnostic queries, which is where we started from. You can go back to that script and add the record instructions just before the database specific queries start:

At the end of the script you can add the instructions to stop recording and show the queries captured by the procedure.

Once the statements are recorded, you can run any of the statements against all databases. For instance, I decided to run the top active writes index query (query 51).

As expected, the procedure adds the database name column to the result set and then displays the merged results.
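
The shape of that merged output is easy to picture outside of SQL Server too. The following Python sketch is only a conceptual model of the merge, not a port of the procedure: it assumes the per-database results are already available as lists of rows, and the function name and the sample database names are hypothetical.

```python
def merge_results(results_by_db, column_names):
    """Merge per-database rows into a single result set.

    Each output row is prefixed with the name of the database it came
    from, and rows are ordered by database name, mirroring the
    "Database Name" column and the ORDER BY 1 used by the procedure.
    """
    header = ["Database Name"] + list(column_names)
    rows = []
    for db_name in sorted(results_by_db, key=str.lower):
        for row in results_by_db[db_name]:
            rows.append([db_name] + list(row))
    return header, rows


if __name__ == "__main__":
    # Made-up sample data: one recovery model row per database
    sample = {
        "msdb": [("SIMPLE",)],
        "master": [("SIMPLE",)],
        "Sales": [("FULL",)],
    }
    header, rows = merge_results(sample, ["RecoveryModel"])
    print(header)
    for row in rows:
        print(row)
```

The procedure does the same thing with a table in tempdb: every database inserts its rows, and the rows that still lack a database name are stamped with DB_NAME().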

You may have noticed that I skipped the first statement in the database-specific section of the script, which is a DBCC command. Unfortunately, not all kinds of statements can be captured with this procedure, because some limitations apply. Besides the inability to capture some DBCC commands, please note that every column in the result set must have an explicit name.

I think that a CLR procedure could overcome these limitations, or at least some of them. I hope I will find the time to try the CLR method soon and I promise I will blog the results.